
Michael Beyeler

(he/him)

Associate Professor

Computer Science

Psychological & Brain Sciences

University of California, Santa Barbara

Dr. Michael Beyeler is Associate Professor of Computer Science and Psychological & Brain Sciences at UC Santa Barbara, where he directs the Bionic Vision Lab. His research combines computational neuroscience, AI, and immersive technology to advance sight restoration for people with incurable blindness. Prior to joining UCSB in 2019, he completed a postdoctoral fellowship at the University of Washington and earned his PhD in Computer Science from UC Irvine. He also holds degrees in Electrical and Biomedical Engineering from ETH Zurich.

Dr. Beyeler serves as Associate Director of UC Santa Barbara’s Center for Virtual Environments and Behavior (ReCVEB). His work has been recognized by the National Institutes of Health (K99/R00 and DP2 New Innovator Award) and by UC Santa Barbara with the 2024-2025 Harold J. Plous Memorial Award for exceptional contributions to research, teaching, and service.

- 3201B BioEngineering

- mbeyeler@ucsb.edu

Honors & Awards

- Harold J. Plous Memorial Award, University of California, Santa Barbara (2024)

- DP2 New Innovator Award, National Institutes of Health (NIH) (2022)

- K99/R00 Pathway to Independence Award, National Institutes of Health (NIH) (2018)

Education

- PhD in Computer Science, 2016, University of California, Irvine

- MS in Biomedical Engineering, 2011, ETH Zurich, Switzerland

- BS in Electrical Engineering, 2009, ETH Zurich, Switzerland

Publications

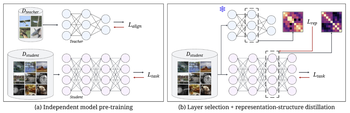

BIRD: Behavior induction via representation-structure distillation

We introduce BIRD (Behavior Induction via Representation-structure Distillation), a flexible framework for transferring aligned behavior by matching the internal representation structure of a student model to that of a teacher.

Galen Pogoncheff, Michael Beyeler The 14th International Conference on Learning Representations (ICLR ‘26)

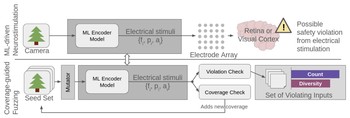

Fuzzing the brain: Automated stress testing for the safety of ML-driven neurostimulation

We propose a systematic, quantitative approach to detect and characterize unsafe stimulation patterns in ML-driven neurostimulation systems.

Mara Downing, Matthew Peng, Jacob Granley, Michael Beyeler, Tevfik Bultan Journal of Neural Engineering

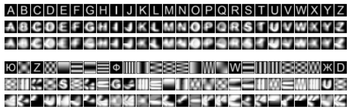

SymbolSight: Minimizing inter-symbol interference for reading with prosthetic vision

We present SymbolSight, a computational framework that selects symbol-to-letter mappings to minimize confusion among frequently adjacent letters. Using simulated prosthetic vision (SPV) and a neural proxy observer, we estimate pairwise symbol confusability and optimize assignments using language-specific bigram statistics.

Jasmine Lesner, Michael Beyeler arXiv

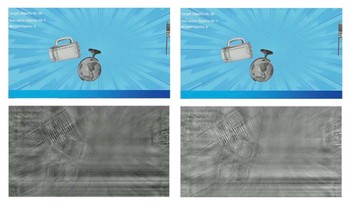

Network-adaptive cloud preprocessing for visual neuroprostheses

We present a network-adaptive pipeline for cloud-assisted visual preprocessing of artificial vision, where real-time round-trip-time (RTT) feedback is used to dynamically modulate image resolution, compression, and transmission rate, explicitly prioritizing temporal continuity under adverse network conditions.

Jiayi Liu, Yilin Wang, Michael Beyeler arXiv

Gamification enhances user engagement and task performance in prosthetic vision testing

We found that gamification can influence measured performance and user experience in prosthetic vision testing, but benefits are not universal and depend on task demands and cognitive load.

Lily M. Turkstra, Byron A. Johnson, Arathy Kartha, Gislin Dagnelie, Michael Beyeler medRxiv

(Note: LMT and BAJ contributed equally to this work.)

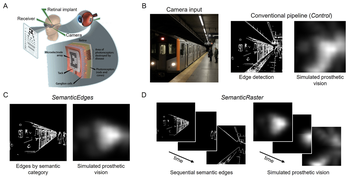

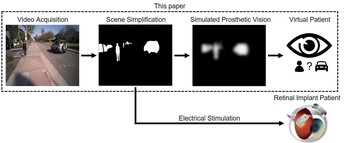

Static or temporal? Semantic scene simplification to aid wayfinding in immersive simulations of bionic vision

We compare two complementary approaches to semantic preprocessing in immersive virtual reality: SemanticEdges, which highlights all relevant objects at once, and SemanticRaster, which staggers object categories over time to reduce visual clutter.

Justin M. Kasowski, Apurv Varshney, Michael Beyeler 31st ACM Symposium on Virtual Reality Software and Technology (VRST) ‘25

Look, predict, intercept: Visual exposure seeds model-based control in moving-target interception

When intercepting disappearing moving targets, we found that humans use a two-stage, effector-invariant interception strategy in which brief visual exposure seeds a predictive controller that allows action to continue when visual information is lost.

Lauren T. Eckhardt, Anvitha Akkaraju, Tori N. LeVier, Justin M. Kasowski, Michael Beyeler PsyArXiv 56jsn_v1

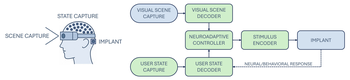

Bionic vision as neuroadaptive XR: Closed-loop perceptual interfaces for neurotechnology

Rather than pursuing a (degraded) imitation of natural sight, bionic vision might be better understood as a form of neuroadaptive XR: a perceptual interface that forgoes visual fidelity in favor of delivering sparse, personalized cues shaped (at its full potential) by user intent, behavioral context, and cognitive state.

Michael Beyeler 2025 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)

Perceptual learning of prosthetic vision using video game training

We evaluated whether gamified training improves compensation for population-coding distortions in sight recovery by testing transfer of learning between a dichoptic object recognition task and a filtered version of Fruit Ninja, finding no significant transfer and suggesting limited generalizability of gamification-based rehabilitation.

Rebecca B. Esquenazi, Kimberly Meier, Michael Beyeler, Drake Wright, Geoffrey M. Boynton, Ione Fine Journal of Vision 25(12), 1-14

Deep learning-based control of electrically evoked activity in human visual cortex

We developed a data-driven neural control framework for a visual cortical prosthesis in a blind human, showing that deep learning can synthesize efficient, stable stimulation patterns that reliably evoke percepts and outperform conventional calibration methods.

Pehuén Moure, Jacob Granley, Fabrizio Grani, Leili Soo, Antonio Lozano, Rocio López-Peco, Adrián Villamarin-Ortiz, Cristina Soto-Sánchez, Shih-Chii Liu, Michael Beyeler, Eduardo Fernández bioRxiv

(Note: PM, JG, and FG are co-first authors. SL, MB, and EF are co-last authors.)

Mouse vs. AI: A neuroethological benchmark for visual robustness and neural alignment

We propose the Mouse vs. AI: Robust Foraging Competition at NeurIPS ‘25, a novel bioinspired visual robustness benchmark to test generalization in reinforcement learning (RL) agents trained to navigate a virtual environment toward a visually cued target.

Marius Schneider, Joe Canzano, Jing Peng, Yuchen Hou, Spencer LaVere Smith, Michael Beyeler arXiv:2509.14446

Distinct roles of central and peripheral vision in rapid scene understanding

We used a real-time, gaze-contingent simulation to examine how central vision loss and peripheral vision loss alter eye movements and scene understanding.

Byron A. Johnson, Ansh K. Soni, Shravan Murlidaran, Michael Beyeler, Miguel P. Eckstein bioRxiv

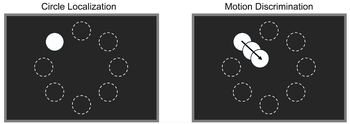

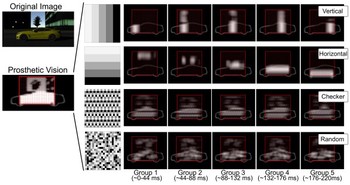

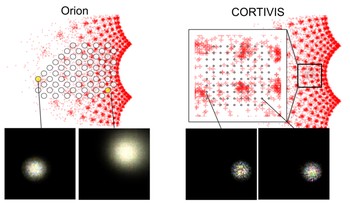

Simulated prosthetic vision confirms checkerboard as an effective raster pattern for epiretinal implants

Using an immersive VR system, we systematically evaluated two behavioral tasks under four raster patterns (horizontal, vertical, checkerboard, and random) and found checkerboard raster to be the most effective.

Justin M. Kasowski, Apurv Varshney, Roksana Sadeghi, Michael Beyeler Journal of Neural Engineering 22 046017

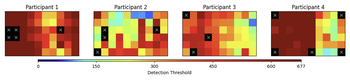

Efficient spatial estimation of perceptual thresholds for retinal implants via Gaussian process regression

We propose a Gaussian Process Regression (GPR) framework to predict perceptual thresholds at unsampled locations while leveraging uncertainty estimates to guide adaptive sampling.

Roksana Sadeghi, Michael Beyeler IEEE EMBC ‘25

Evaluating deep human-in-the-loop optimization for retinal implants using sighted participants

We evaluate HILO using sighted participants viewing simulated prosthetic vision to assess its ability to optimize stimulation strategies under realistic conditions.

Eirini Schoinas, Adyah Rastogi, Anissa Carter, Jacob Granley, Michael Beyeler IEEE EMBC ‘25

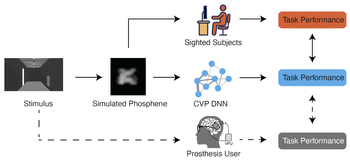

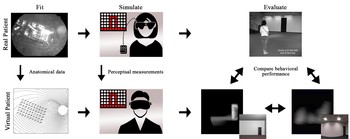

A deep learning framework for predicting functional visual performance in bionic eye users

We introduce a computational virtual patient (CVP) pipeline that integrates anatomically grounded phosphene simulation with task-optimized deep neural networks to forecast patient perceptual capabilities across diverse prosthetic designs and tasks.

Jonathan Skaza, Shravan Murlidaran, Apurv Varshney, Ziqi Wen, William Wang, Miguel P. Eckstein, Michael Beyeler bioRxiv

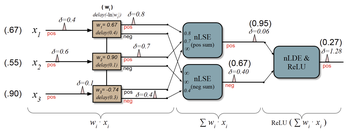

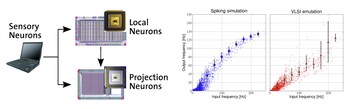

Single spike artificial neural networks

We propose a novel temporal-digital architecture that encodes ANN weights as delays and activations as signal arrival times, enabling full ANN execution with temporal reuse, noise-tolerant summation, and hybrid memory, achieving up to 11× energy and 4× latency improvements over SNNs, and 3.5× energy savings over 8-bit digital systolic arrays.

Rhys Gretsch, Michael Beyeler, Jeremy Lau, Timothy Sherwood International Symposium on Computer Architecture (ISCA) ‘25

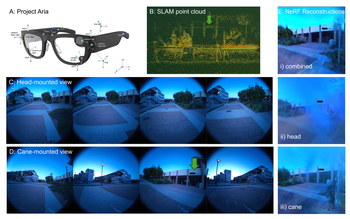

Beyond physical reach: Comparing head- and cane-mounted cameras for last-mile navigation by blind users

We evaluate head- and cane-mounted cameras for blind navigation and show that combining both yields superior spatial perception, guiding the design of hybrid, user-aligned assistive systems.

Apurv Varshney, Lucas Nadolskis, Tobias Höllerer, Michael Beyeler arXiv:2504.19345

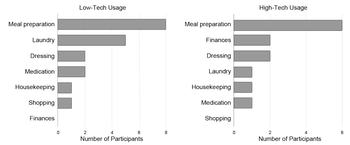

Assistive technology use in domestic activities by people who are blind

We present insights from 16 semi-structured interviews with individuals who are either legally or completely blind, highlighting both the current use and potential future applications of technologies for home-based iADLs.

Lily M. Turkstra, Tanya Bhatia, Alexa Van Os, Michael Beyeler Scientific Reports

Aligning visual prosthetic development with implantee needs

Our interview study found a significant gap between researcher expectations and implantee experiences with visual prostheses, underscoring the importance of focusing future research on usability and real-world application.

Lucas Nadolskis, Lily M. Turkstra, Ebenezer Larnyo, Michael Beyeler Translational Vision Science & Technology (TVST) 13(28)

(Note: LN and LMT contributed equally to this work.)

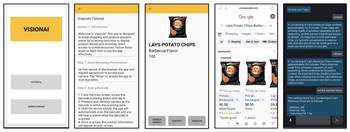

VisionAI - Shopping Assistance for People with Vision Impairments

We introduce VisionAI, a mobile application designed to enhance the in-store shopping experience for individuals with vision impairments.

Anika Arora, Lucas Nadolskis, Michael Beyeler, Misha Sra 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)

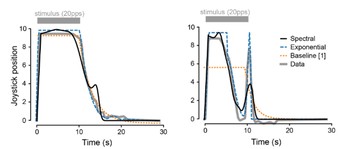

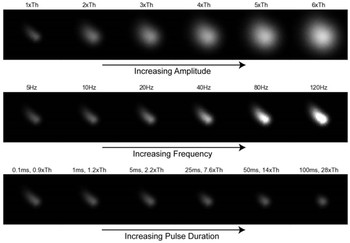

Predicting the temporal dynamics of prosthetic vision

We introduce two computational models designed to accurately predict phosphene fading and persistence under varying stimulus conditions, cross-validated on behavioral data reported by nine users of the Argus II Retinal Prosthesis System.

Yuchen Hou, Laya Pullela, Jiaxin Su, Sriya Aluru, Shivani Sista, Xiankun Lu, Michael Beyeler IEEE EMBC ‘24

(Note: YH and LP contributed equally to this work.)

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses on the shared representations between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis, and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler Workshop on Representational Alignment (Re-Align), ICLR ‘24

(Note: JG and GP contributed equally to this work.)

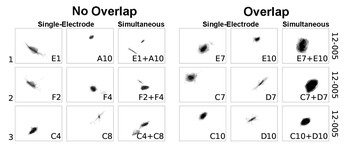

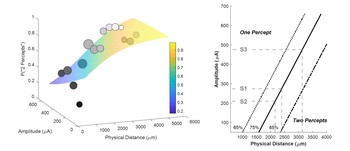

Axonal stimulation affects the linear summation of single-point perception in three Argus II users

We retrospectively analyzed phosphene shape data collected from three Argus II patients to investigate which neuroanatomical and stimulus parameters predict paired-phosphene appearance and whether phosphenes add up linearly.

Yuchen Hou, Devyani Nanduri, Jacob Granley, James D. Weiland, Michael Beyeler Journal of Neural Engineering

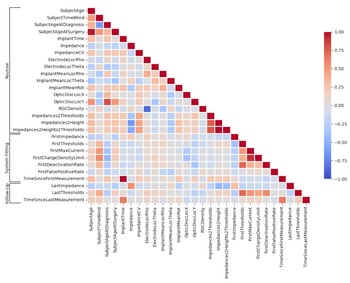

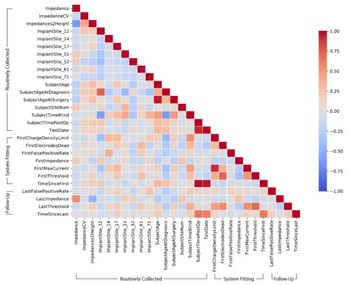

Explainable machine learning predictions of perceptual sensitivity for retinal prostheses

We present explainable artificial intelligence (XAI) models fit on a large longitudinal dataset that can predict perceptual thresholds on individual Argus II electrodes over time.

Galen Pogoncheff, Zuying Hu, Ariel Rokem, Michael Beyeler Journal of Neural Engineering

Eye tracking performance in mobile mixed reality

We conducted user studies evaluating eye tracking on the Magic Leap One, the HoloLens 2, and the Meta Quest Pro to show how locomotion influences eye tracking performance in these headsets.

Satyam Awasthi, Vivian Ross, Sydney Lim, Michael Beyeler, Tobias Höllerer IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR) ‘24

Stress affects navigation strategies in immersive virtual reality

We used immersive virtual reality to develop a novel behavioral paradigm to examine navigation under dynamically changing, high-stress situations.

Apurv Varshney, Mitchell Munns, Justin Kasowski, Mantong Zhou, Chuanxiuyue He, Scott Grafton, Barry Giesbrecht, Mary Hegarty, Michael Beyeler Scientific Reports

(Note: AV and MM contributed equally to this work.)

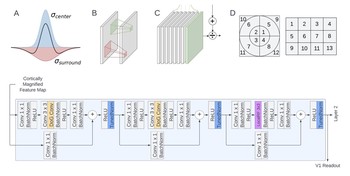

Explaining V1 properties with a biologically constrained deep learning architecture

We systematically incorporated neuroscience-derived architectural components into CNNs to identify a set of mechanisms and architectures that comprehensively explain neural activity in V1.

Galen Pogoncheff, Jacob Granley, Michael Beyeler 37th Conference on Neural Information Processing Systems (NeurIPS) ‘23

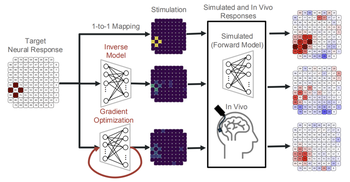

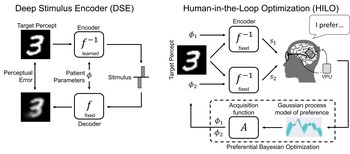

Human-in-the-loop optimization for deep stimulus encoding in visual prostheses

We propose a personalized stimulus encoding strategy that combines state-of-the-art deep stimulus encoding with preferential Bayesian optimization.

Jacob Granley, Tristan Fauvel, Matthew Chalk, Michael Beyeler 37th Conference on Neural Information Processing Systems (NeurIPS) ‘23

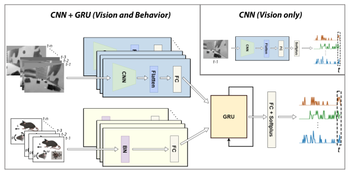

Multimodal deep learning model unveils behavioral dynamics of V1 activity in freely moving mice

We introduce a multimodal recurrent neural network that integrates gaze-contingent visual input with behavioral and temporal dynamics to explain V1 activity in freely moving mice.

Aiwen Xu, Yuchen Hou, Cristopher M. Niell, Michael Beyeler 37th Conference on Neural Information Processing Systems (NeurIPS) ‘23

EyeTTS: Evaluating and Calibrating Eye Tracking for Mixed-Reality Locomotion

We developed EyeTTS, an eye tracking test suite to evaluate and compare different eye tracking devices on various augmented reality tasks and metrics, specifically for scenarios involving head movement and locomotion.

Satyam Awasthi, Vivian Ross, Michael Beyeler, Tobias Höllerer 2023 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)

(Note: SA and VR contributed equally to this work.)

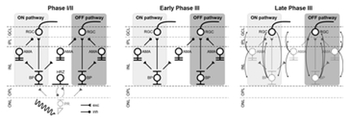

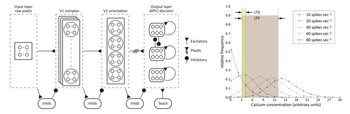

Retinal ganglion cells undergo cell type–specific functional changes in a computational model of cone-mediated retinal degeneration

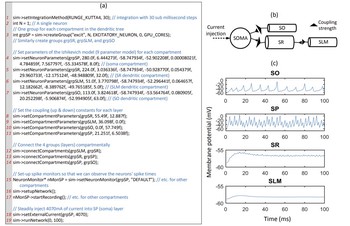

We present a biophysically detailed in silico model of retinal degeneration that simulates the network-level response to both light and electrical stimulation as a function of disease progression.

Aiwen Xu, Michael Beyeler Frontiers in Neuroscience: Special Issue “Rising Stars in Visual Neuroscience”

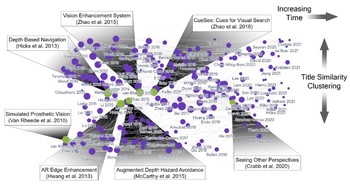

A systematic review of extended reality (XR) for understanding and augmenting vision loss

We present a systematic literature review of 227 publications from 106 different venues assessing the potential of XR technology to further visual accessibility.

Justin Kasowski, Byron A. Johnson, Ryan Neydavood, Anvitha Akkaraju, Michael Beyeler Journal of Vision 23(5):5, 1–24

(Note: JK and BAJ are co-first authors.)

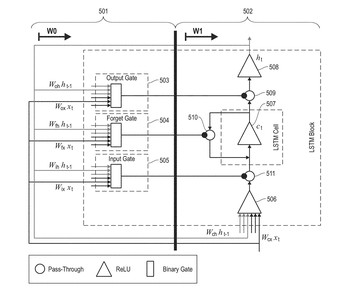

Long-short term memory (LSTM) cells on spiking neuromorphic hardware

We present a way to implement long short-term memory (LSTM) cells on spiking neuromorphic hardware.

Rathinakumar Appuswamy, Michael Beyeler, Pallab Datta, Myron Flickner, Dharmendra S Modha US Patent No. 11,636,317

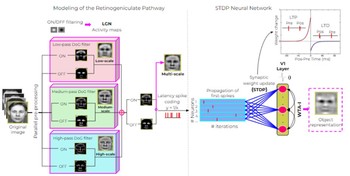

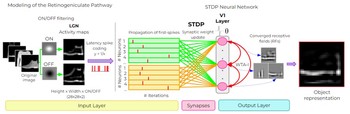

Efficient multi-scale representation of visual objects using a biologically plausible spike-latency code and winner-take-all inhibition

We present an SNN model that uses spike-latency coding and winner-take-all inhibition to efficiently represent visual objects with as few as 15 spikes per neuron.

Melani Sanchez-Garcia, Tushar Chauhan, Benoit R. Cottereau, Michael Beyeler Biological Cybernetics

(Note: MSG and TC are co-first authors. BRC and MB are co-last authors.)

Adapting brain-like neural networks for modeling cortical visual prostheses

We show that a neurologically inspired decoding of CNN activations produces qualitatively accurate phosphenes, comparable to phosphenes reported by real patients.

Jacob Granley, Alexander Riedel, Michael Beyeler Shared Visual Representations in Human & Machine Intelligence (SVRHM) Workshop, NeurIPS ‘22

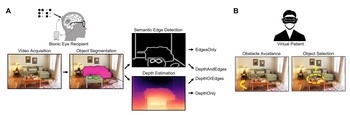

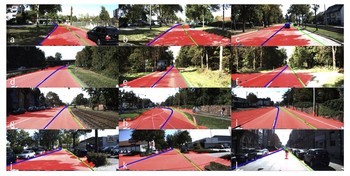

The relative importance of depth cues and semantic edges for indoor mobility using simulated prosthetic vision in immersive virtual reality

We used a neurobiologically inspired model of simulated prosthetic vision in an immersive virtual reality environment to test the relative importance of semantic edges and relative depth cues to support the ability to avoid obstacles and identify objects.

Alex Rasla, Michael Beyeler 28th ACM Symposium on Virtual Reality Software and Technology (VRST) ‘22

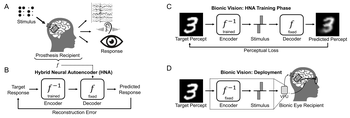

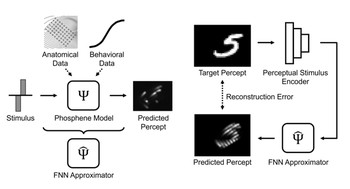

Hybrid neural autoencoders for stimulus encoding in visual and other sensory neuroprostheses

What is the required stimulus to produce a desired percept? Here we frame this as an end-to-end optimization problem, where a deep neural network encoder is trained to invert a known, fixed forward model that approximates the underlying biological system.

Jacob Granley, Lucas Relic, Michael Beyeler 36th Conference on Neural Information Processing Systems (NeurIPS) ‘22

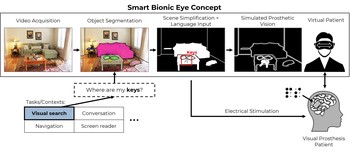

Towards a Smart Bionic Eye: AI-powered artificial vision for the treatment of incurable blindness

Rather than aiming to represent the visual scene as naturally as possible, a Smart Bionic Eye could provide visual augmentations through the means of artificial intelligence–based scene understanding, tailored to specific real-world tasks that are known to affect the quality of life of people who are blind.

Michael Beyeler, Melani Sanchez-Garcia Journal of Neural Engineering

Greedy optimization of electrode arrangement for epiretinal prostheses

We optimize electrode arrangement of epiretinal implants to maximize visual subfield coverage.

Ashley Bruce, Michael Beyeler Medical Image Computing and Computer Assisted Intervention (MICCAI) ‘22

Factors affecting two-point discrimination in Argus II patients

We explored the causes of high thresholds and poor spatial resolution within the Argus II epiretinal implant.

Ezgi I. Yücel, Roksana Sadeghi, Arathy Kartha, Sandra R. Montezuma, Gislin Dagnelie, Ariel Rokem, Geoffrey M. Boynton, Ione Fine, Michael Beyeler Frontiers in Neuroscience

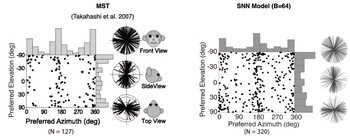

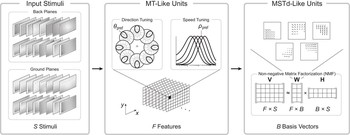

Cortical motion perception emerges from dimensionality reduction with evolved spike-timing dependent plasticity rules

We developed a spiking neural network model that showed MSTd-like response properties can emerge from evolving spike-timing dependent plasticity with homeostatic synaptic scaling (STDP-H) parameters of the connections between area MT and MSTd.

Kexin Chen, Michael Beyeler, Jeffrey L. Krichmar Journal of Neuroscience

Efficient visual object representation using a biologically plausible spike-latency code and winner-take-all inhibition

We present an SNN model that uses spike-latency coding and winner-take-all inhibition to efficiently represent visual stimuli from the Fashion MNIST dataset.

Melani Sanchez-Garcia, Tushar Chauhan, Benoit R. Cottereau, Michael Beyeler NeuroVision Workshop, IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) ‘22

Deep learning-based perceptual stimulus encoder for bionic vision

We propose a perceptual stimulus encoder based on convolutional neural networks that is trained in an end-to-end fashion to predict the electrode activation patterns required to produce a desired visual percept.

Lucas Relic, Bowen Zhang, Yi-Lin Tuan, Michael Beyeler ACM Augmented Humans (AHs) ‘22

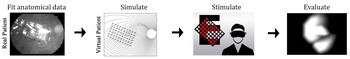

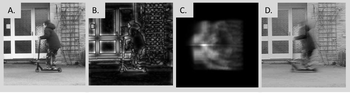

Immersive virtual reality simulations of bionic vision

We present VR-SPV, an open-source virtual reality toolbox for simulated prosthetic vision that uses a psychophysically validated computational model to allow sighted participants to ‘see through the eyes’ of a bionic eye user.

Justin Kasowski, Michael Beyeler ACM Augmented Humans (AHs) ‘22

Learning to see again: Perceptual learning of simulated abnormal on- off-cell population responses in sighted individuals

We show that sighted individuals can learn to adapt to the unnatural on- and off-cell population responses produced by electronic and optogenetic sight recovery technologies.

Rebecca B. Esquenazi, Kimberly Meier, Michael Beyeler, Geoffrey M. Boynton, Ione Fine Journal of Vision 21(10)

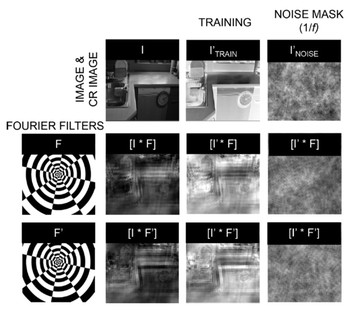

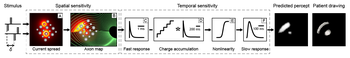

A computational model of phosphene appearance for epiretinal prostheses

We present a phenomenological model that predicts phosphene appearance as a function of stimulus amplitude, frequency, and pulse duration.

Jacob Granley, Michael Beyeler IEEE Engineering in Medicine and Biology Society Conference (EMBC) ‘21

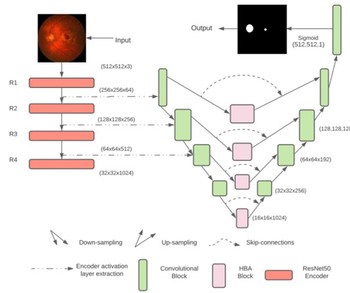

U-Net with hierarchical bottleneck attention for landmark detection in fundus images of the degenerated retina

We propose HBA-U-Net: a U-Net backbone with hierarchical bottleneck attention to highlight retinal abnormalities that may be important for fovea and optic disc segmentation in the degenerated retina.

Shuyun Tang, Ziming Qi, Jacob Granley, Michael Beyeler MICCAI Workshop on Ophthalmic Image Analysis - OMIA ‘21

Explainable AI for retinal prostheses: Predicting electrode deactivation from routine clinical measures

We present an explainable artificial intelligence (XAI) model fit on a large longitudinal dataset that can predict electrode deactivation in Argus II.

Zuying Hu, Michael Beyeler IEEE EMBS Conference on Neural Engineering (NER) ‘21

Towards immersive virtual reality simulations of bionic vision

We propose to embed biologically realistic models of simulated prosthetic vision in immersive virtual reality so that sighted subjects can act as ‘virtual patients’ in real-world tasks.

Justin Kasowski, Nathan Wu, Michael Beyeler ACM Augmented Humans (AHs) ‘21

Deep learning-based scene simplification for bionic vision

We combined deep learning-based scene simplification strategies with a psychophysically validated computational model of the retina to generate realistic predictions of simulated prosthetic vision.

Nicole Han, Sudhanshu Srivastava, Aiwen Xu, Devi Klein, Michael Beyeler ACM Augmented Humans (AHs) ‘21

Model-based recommendations for optimal surgical placement of epiretinal implants

We systematically explored the space of possible implant configurations to make recommendations for optimal intraocular positioning of Argus II.

Michael Beyeler, Geoffrey M. Boynton, Ione Fine, Ariel Rokem Medical Image Computing and Computer Assisted Intervention (MICCAI) ‘19

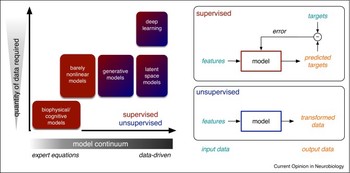

Data-driven models in human neuroscience and neuroengineering

In this review, we provide an accessible primer to modern modeling approaches and highlight recent data-driven discoveries in the domains of neuroimaging, single-neuron and neuronal population responses, and device neuroengineering.

Bingni W. Brunton, Michael Beyeler Current Opinion in Neurobiology 58:21-29

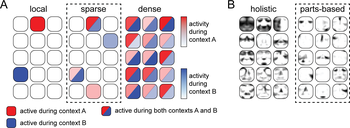

Neural correlates of sparse coding and dimensionality reduction

Brains face the fundamental challenge of extracting relevant information from high-dimensional external stimuli in order to form the neural basis that can guide an organism’s behavior and its interaction with the world. One potential approach to addressing this challenge is to reduce the number of variables required to represent a particular …

Michael Beyeler, Emily L. Rounds, Kristofor D. Carlson, Nikil Dutt, Jeffrey L. Krichmar PLOS Computational Biology 15(6):e1006908

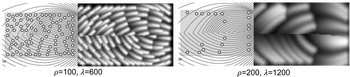

A model of ganglion axon pathways accounts for percepts elicited by retinal implants

We show that the perceptual experience of retinal implant users can be accurately predicted using a computational model that simulates each individual patient’s retinal ganglion axon pathways.

Michael Beyeler, Devyani Nanduri, James D. Weiland, Ariel Rokem, Geoffrey M. Boynton, Ione Fine Scientific Reports 9(1):9199

Biophysical model of axonal stimulation in epiretinal visual prostheses

To investigate the effect of axonal stimulation on the retinal response, we developed a computational model of a small population of morphologically and biophysically detailed retinal ganglion cells, and simulated their response to epiretinal electrical stimulation. We found that activation thresholds of ganglion cell somas and axons varied …

Michael Beyeler IEEE/EMBS Conference on Neural Engineering (NER) ‘19

Commentary: Detailed visual cortical responses generated by retinal sheet transplants in rats with severe retinal degeneration

A Commentary on: Detailed Visual Cortical Responses Generated by Retinal Sheet Transplants in Rats with Severe Retinal Degeneration by AT Foik et al. (2018).

Michael Beyeler Frontiers in Neuroscience 13:471

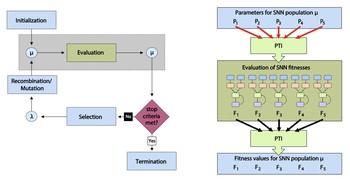

CARLsim 4: An open source library for large scale, biologically detailed spiking neural network simulation using heterogeneous clusters

We have developed CARLsim 4, a user-friendly SNN library written in C++ that can simulate large biologically detailed neural networks. Improving on the efficiency and scalability of earlier releases, the present release allows for the simulation using multiple GPUs and multiple CPU cores concurrently in a heterogeneous computing cluster. …

Ting-Shou Chou, Hirak J. Kashyap, Jinwei Xing, Stanislav Listopad, Emily L. Rounds, Michael Beyeler, Nikil Dutt, Jeffrey L. Krichmar Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN)

Learning to see again: Biological constraints on cortical plasticity and the implications for sight restoration technologies

The goal of this review is to summarize the vast basic science literature on developmental and adult cortical plasticity with an emphasis on how this literature might relate to the field of prosthetic vision.

Michael Beyeler, Ariel Rokem, Geoffrey M. Boynton, Ione Fine Journal of Neural Engineering 14(5)

pulse2percept: A Python-based simulation framework for bionic vision

pulse2percept is an open-source Python simulation framework used to predict the perceptual experience of retinal prosthesis patients across a wide range of implant configurations.

Michael Beyeler, Geoffrey M. Boynton, Ione Fine, Ariel Rokem Python in Science Conference (SciPy) ‘17

3D visual response properties of MSTd emerge from an efficient, sparse population code

Using a dimensionality reduction technique known as non-negative matrix factorization, we found that a variety of medial superior temporal (MSTd) neural response properties could be derived from MT-like input features. The responses that emerge from this technique, such as 3D translation and rotation selectivity, spiral tuning, and heading …

Michael Beyeler, Nikil Dutt, Jeffrey L. Krichmar Journal of Neuroscience 36(32): 8399-8415

A GPU-accelerated cortical neural network model for visually guided robot navigation

We present a cortical neural network model for visually guided navigation that has been embodied on a physical robot exploring a real-world environment. The model includes a rate based motion energy model for area V1, and a spiking neural network model for cortical area MT. The model generates a cortical representation of optic flow, determines the …

Michael Beyeler, Nicolas Oros, Nikil Dutt, Jeffrey L. Krichmar Neural Networks 72: 75-87

CARLsim 3: A user-friendly and highly optimized library for the creation of neurobiologically detailed spiking neural networks

We have developed CARLsim 3, a user-friendly, GPU-accelerated SNN library written in C/C++ that is capable of simulating biologically detailed neural models. The present release of CARLsim provides a number of improvements over our prior SNN library to allow the user to easily analyze simulation data, explore synaptic plasticity rules, and automate …

Michael Beyeler, Kristofor D. Carlson, Ting-Shou Chou, Nikil Dutt, Jeffrey L. Krichmar Proceedings of the 2015 International Joint Conference on Neural Networks (IJCNN)

Vision-based robust road lane detection in urban environments

This paper presents an integrative approach to ego-lane detection that aims to be as simple as possible to enable real-time computation while being able to adapt to a variety of urban and rural traffic scenarios. The approach at hand combines and extends a road segmentation method in an illumination-invariant color image, lane markings detection …

Michael Beyeler, Florian Mirus, Alexander Verl Proceedings of the 2014 International Conference on Robotics and Automation (ICRA)

Efficient spiking neural network model of pattern motion selectivity in visual cortex

We present a two-stage model of visual area MT that we believe to be the first large-scale spiking network to demonstrate pattern direction selectivity. In this model, component-direction-selective (CDS) cells in MT linearly combine inputs from V1 cells that have spatiotemporal receptive fields according to the motion energy model of Simoncelli and …

Michael Beyeler, Micah Richert, Nikil Dutt, Jeffrey L. Krichmar Neuroinformatics 12(3): 435-454

GPGPU accelerated simulation and parameter tuning for neuromorphic applications

We describe a simulation environment that can be used to design, construct, and run spiking neural networks (SNNs) quickly and efficiently using graphics processing units (GPUs). We then explain how the design of the simulation environment utilizes the parallel processing power of GPUs to simulate large-scale SNNs and describe recent modeling …

Kristofor D. Carlson, Michael Beyeler, Nikil Dutt, Jeffrey L. Krichmar Proceedings of the 2014 Asia and South Pacific Design Automation Conference (ASP-DAC)

Categorization and decision-making in a neurobiologically plausible spiking network using a STDP-like plasticity rule

We present a large-scale model of a hierarchical spiking neural network (SNN) that integrates a low-level memory encoding mechanism with a higher-level decision process to perform a visual classification task in real-time. The model consists of Izhikevich neurons and conductance-based synapses for realistic approximation of neuronal dynamics, a …

Michael Beyeler, Nikil Dutt, Jeffrey L. Krichmar Neural Networks 48:109-124

Exploring olfactory sensory networks: Simulations and hardware emulation

Olfactory stimuli are represented in a high-dimensional space by neural networks of the olfactory system. While a number of studies have illustrated the importance of inhibitory networks within the olfactory bulb or the antennal lobe for the shaping and processing of olfactory information, it is not clear how exactly these inhibitory networks are …

Michael Beyeler, Fabio Stefanini, Henning Proske, Giovanni Galizia, Elisabetta Chicca Proceedings of the 2010 IEEE Biomedical Circuits and Systems Conference (BioCAS)