Deep learning-based perceptual stimulus encoder for bionic vision

Best Poster Award

Abstract

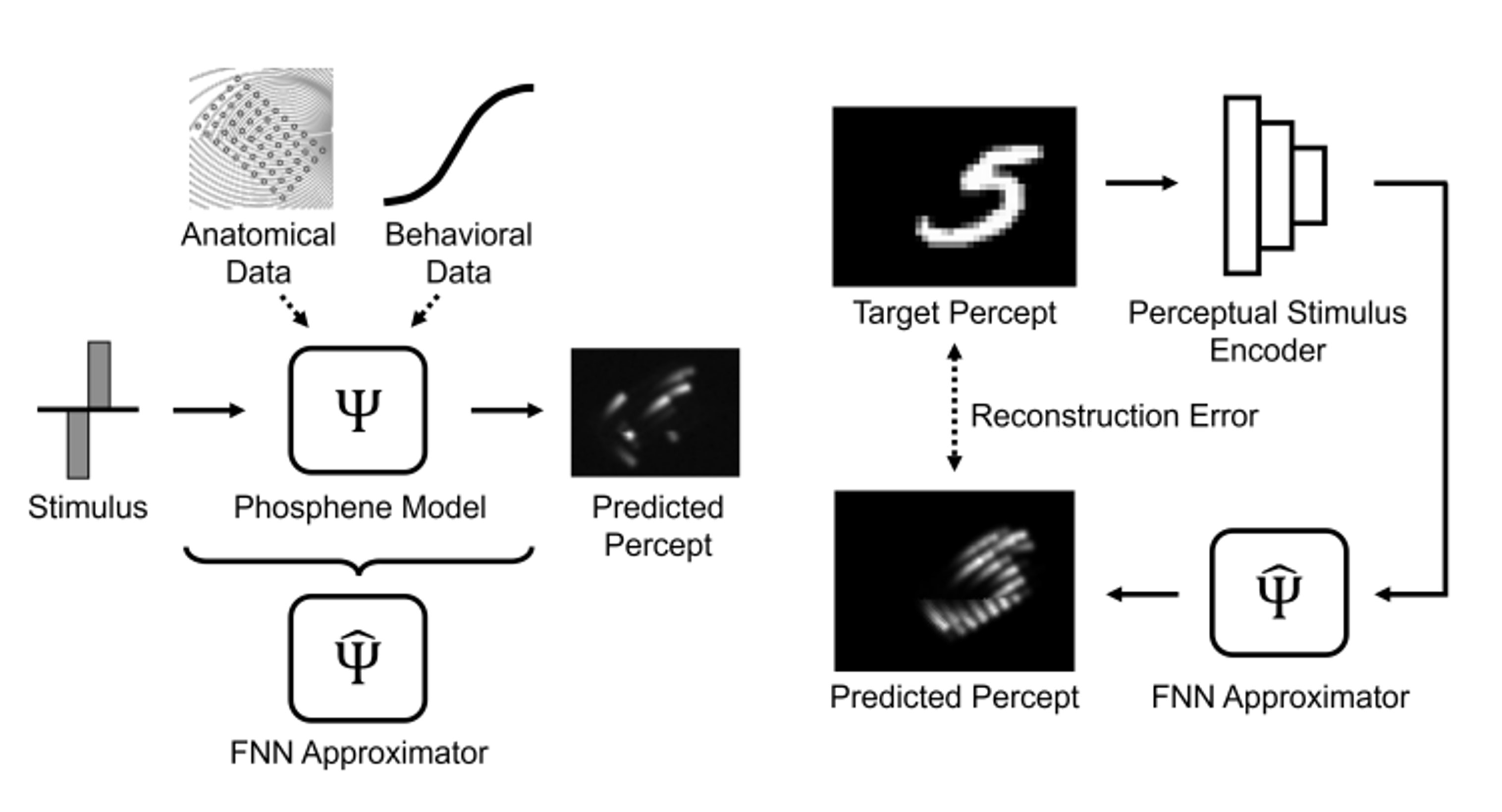

Retinal implants have the potential to treat incurable blindness, yet the quality of the artificial vision they produce is still rudimentary. An outstanding challenge is identifying electrode activation patterns that lead to intelligible visual percepts (phosphenes). Here we propose a perceptual stimulus encoder (PSE) based on convolutional neural networks (CNNs) that is trained in an end-to-end fashion to predict the electrode activation patterns required to produce a desired visual percept. We demonstrate the effectiveness of the encoder on MNIST using a psychophysically validated phosphene model tailored to individual retinal implant users. The present work constitutes an essential first step towards improving the quality of the artificial vision provided by retinal implants.
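To make the core idea in the abstract concrete, the toy sketch below recovers an electrode activation pattern by descending the gradient of a reconstruction loss through a differentiable forward model. It is not the paper's method: the CNN encoder is omitted and the stimulus is optimized directly, and the psychophysically validated phosphene model is replaced by an assumed Gaussian-blob model. The grid size, electrode positions, `SIGMA`, and all function names are hypothetical choices made for illustration.

```python
import math

GRID = 8                                        # percept is GRID x GRID pixels (assumed)
ELECTRODES = [(2, 2), (2, 5), (5, 2), (5, 5)]   # electrode centers (assumed)
SIGMA = 1.0                                     # phosphene spread in pixels (assumed)


def build_phosphene_matrix():
    """Row p, column e: brightness at pixel p per unit amplitude on electrode e."""
    rows = []
    for y in range(GRID):
        for x in range(GRID):
            rows.append([
                math.exp(-((x - ex) ** 2 + (y - ey) ** 2) / (2 * SIGMA ** 2))
                for ex, ey in ELECTRODES
            ])
    return rows


def predict_percept(A, stim):
    """Toy forward model: the percept is a linear sum of Gaussian phosphenes."""
    return [sum(a * s for a, s in zip(row, stim)) for row in A]


def mse(percept, target):
    """Mean squared error between predicted and desired percept."""
    return sum((p - t) ** 2 for p, t in zip(percept, target)) / len(target)


def optimize_stimulus(A, target, steps=200, lr=5.0):
    """Projected gradient descent on the electrode amplitudes."""
    stim = [0.0] * len(A[0])
    n = len(target)
    for _ in range(steps):
        residual = [p - t for p, t in zip(predict_percept(A, stim), target)]
        # Gradient of the MSE w.r.t. each amplitude, via the chain rule
        # through the linear forward model.
        grad = [
            2.0 / n * sum(r * row[e] for r, row in zip(residual, A))
            for e in range(len(stim))
        ]
        # Gradient step, then clamp amplitudes to the allowed range [0, 1].
        stim = [min(1.0, max(0.0, s - lr * g)) for s, g in zip(stim, grad)]
    return stim


A = build_phosphene_matrix()
true_stim = [0.8, 0.1, 0.4, 0.6]          # made-up amplitudes, used only to build a target
target = predict_percept(A, true_stim)    # the "desired percept"
recovered = optimize_stimulus(A, target)
print([round(s, 3) for s in recovered])
```

In the approach described in the abstract, a CNN encoder is trained to output the stimulus for any desired percept, rather than solving a separate optimization for each image as this sketch does.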

In related news, Lucas Relic and team took home the Best Poster Award🥇at Augmented Humans '22 (@aug_humans) for their work on a deep learning-based perceptual stimulus encoder for bionic vision:

— Bionic Vision Lab (@bionicvisionlab) March 22, 2022

Preprint: https://t.co/rOZZTjFY2g

Video: https://t.co/EhqwLTzymo

This is a very cool idea to get the best out of retinal or cochlear implants: create a differentiable model of the sensorium, and backpropagate to get the optimal stimulation pattern. From @bionicvisionlab https://t.co/lXMqqkftD4 pic.twitter.com/Dxr52IHGVD

— Patrick Mineault (@patrickmineault) June 5, 2022