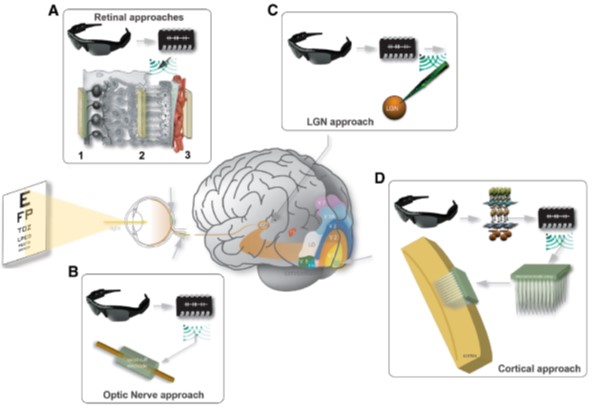

Main approaches for the design of a visual prosthesis (Fernandez, 2018) include retinal (A), optic nerve (B), lateral geniculate nucleus (LGN, C), and cortical approaches (D).

Rethinking sight restoration through models, data, and lived experience.

We experience the world through rich and varied forms of vision. For people who are blind or have low vision, existing tools and technologies often fall short of supporting this diversity of experience. Our goal is to understand how biological and artificial vision systems work, and to use those insights to design neurotechnologies that expand access to visual information and support greater independence.

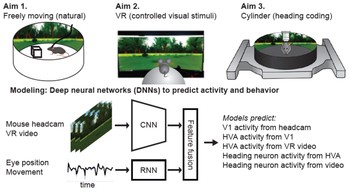

The Bionic Vision Lab tackles this challenge at the intersection of neuroscience, psychology, and computer science. We combine behavioral experiments, VR/AR, and neurophysiological methods (EEG, TMS, physiological sensing) with computational modeling, machine learning, and computer vision. This combination lets us connect brain, behavior, and technology: we ask how perception supports real-world action, and how artificial systems can interface with the brain to restore or augment visual function.

Our work spans three interconnected research areas:

-

Understanding perception and behavior. We study how people with low vision or blindness perceive and navigate complex environments, using psychophysics, immersive VR, and ambulatory head/eye/body tracking to link perception to real-world function.

-

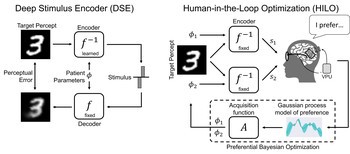

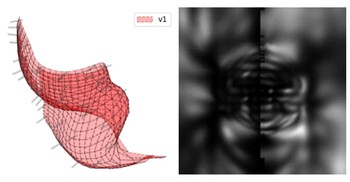

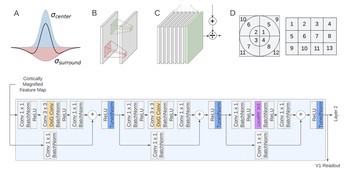

Modeling neural systems and prosthetic vision. We develop biophysically informed and machine learning models of retinal and cortical stimulation, predicting what visual prosthesis users see and designing algorithms to optimize stimulation strategies.

-

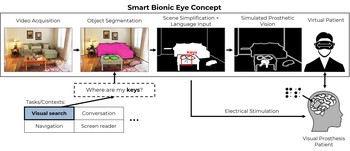

Building intelligent assistive technologies. We build real-time XR testbeds and computer vision–based assistive tools, using insights from human and animal vision to power the next generation of wearable and implantable devices.

This integrative approach welcomes students with diverse strengths: some focus on human behavior and perception, others on neural systems and computation, and others on designing interactive technologies. Together, they work toward a shared goal: creating tools and interfaces that bring meaningful, functional vision within reach.