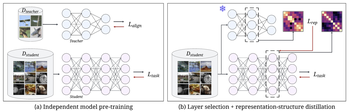

We introduce BIRD (Behavior Induction via Representation-structure Distillation), a flexible framework for transferring aligned behavior by matching the internal representation structure of a student model to that of a teacher.

NeuroAI Models of the Visual System

Understanding the early visual system in health and disease is a key issue for neuroscience and neuroengineering applications such as visual prostheses.

Although the processing of visual information in the healthy retina and early visual cortex (EVC) has been studied in detail, no comprehensive computational model exists that captures the many cell-level and network-level biophysical changes common to retinal degenerative diseases and other sources of visual impairment.

To address this challenge, we are developing computational models of the retina and EVC to elucidate the neural code of vision.

Project Leads:

PhD Student

PhD Student

Project Affiliate:

PhD Student

Principal Investigator:

Assistant Professor

Publications

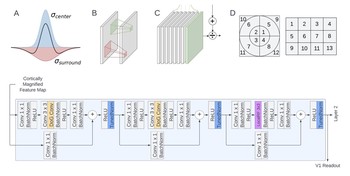

Explaining V1 properties with a biologically constrained deep learning architecture

We systematically incorporated neuroscience-derived architectural components into CNNs to identify a set of mechanisms and architectures that comprehensively explain neural activity in V1.

Galen Pogoncheff, Jacob Granley, Michael Beyeler. 37th Conference on Neural Information Processing Systems (NeurIPS) ’23

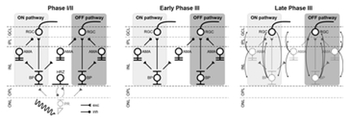

Retinal ganglion cells undergo cell type–specific functional changes in a computational model of cone-mediated retinal degeneration

We present a biophysically detailed in silico model of retinal degeneration that simulates the network-level response to both light and electrical stimulation as a function of disease progression.

Aiwen Xu, Michael Beyeler. Frontiers in Neuroscience: Special Issue “Rising Stars in Visual Neuroscience”

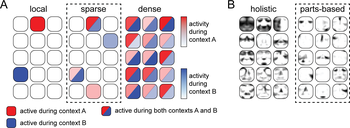

Neural correlates of sparse coding and dimensionality reduction

Brains face the fundamental challenge of extracting relevant information from high-dimensional external stimuli in order to form the neural basis that can guide an organism’s behavior and its interaction with the world. One potential approach to addressing this challenge is to reduce the number of variables required to represent a particular …

Michael Beyeler, Emily L. Rounds, Kristofor D. Carlson, Nikil Dutt, Jeffrey L. Krichmar. PLOS Computational Biology 15(6):e1006908

Biophysical model of axonal stimulation in epiretinal visual prostheses

To investigate the effect of axonal stimulation on the retinal response, we developed a computational model of a small population of morphologically and biophysically detailed retinal ganglion cells, and simulated their response to epiretinal electrical stimulation. We found that activation thresholds of ganglion cell somas and axons varied …

Michael Beyeler. IEEE/EMBS Conference on Neural Engineering (NER) ’19

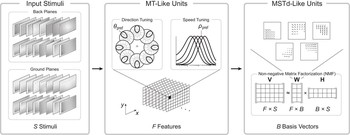

3D visual response properties of MSTd emerge from an efficient, sparse population code

Using a dimensionality reduction technique known as non-negative matrix factorization, we found that a variety of medial superior temporal (MSTd) neural response properties could be derived from MT-like input features. The responses that emerge from this technique, such as 3D translation and rotation selectivity, spiral tuning, and heading …

Michael Beyeler, Nikil Dutt, Jeffrey L. Krichmar. Journal of Neuroscience 36(32):8399-8415
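
For readers unfamiliar with non-negative matrix factorization (NMF), the following is a minimal sketch of the general technique, not the implementation used in the paper. It assumes the standard Lee-Seung multiplicative updates for the squared-error objective, and it uses random non-negative data as a stand-in for MT-like input features. The function name `nmf`, the matrix sizes, and the rank are all hypothetical choices made for illustration.

```python
import numpy as np


def nmf(V, rank, n_iter=200, eps=1e-9, seed=0):
    """Approximate a non-negative matrix V (features x samples) as W @ H.

    Uses Lee-Seung multiplicative updates for the Frobenius-norm
    objective. W holds non-negative basis vectors (one per column);
    H holds the non-negative activation of each basis vector per sample.
    """
    rng = np.random.default_rng(seed)
    n_features, n_samples = V.shape
    # Strictly positive random initialization keeps the updates well defined.
    W = rng.random((n_features, rank)) + eps
    H = rng.random((rank, n_samples)) + eps
    for _ in range(n_iter):
        # Element-wise updates; eps guards against division by zero.
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Toy data (an assumption for illustration): 40 hypothetical input units,
# 300 stimuli, generated from 4 hidden non-negative components.
rng = np.random.default_rng(1)
V = rng.random((40, 4)) @ rng.random((4, 300))

W, H = nmf(V, rank=4)
rel_err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
print(f"relative reconstruction error: {rel_err:.4f}")
```

Because both factors are constrained to be non-negative, each sample is described as a purely additive combination of basis vectors, which tends to yield parts-based and often sparse representations.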

Efficient spiking neural network model of pattern motion selectivity in visual cortex

We present a two-stage model of visual area MT that we believe to be the first large-scale spiking network to demonstrate pattern direction selectivity. In this model, component-direction-selective (CDS) cells in MT linearly combine inputs from V1 cells that have spatiotemporal receptive fields according to the motion energy model of Simoncelli and …

Michael Beyeler, Micah Richert, Nikil Dutt, Jeffrey L. Krichmar. Neuroinformatics 12(3):435-454