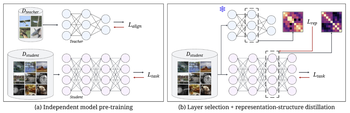

We introduce BIRD (Behavior Induction via Representation-structure Distillation), a flexible framework for transferring aligned behavior by matching the internal representation structure of a student model to that of a teacher.

Who We Are

Rethinking sight restoration through models, data, and lived experience.

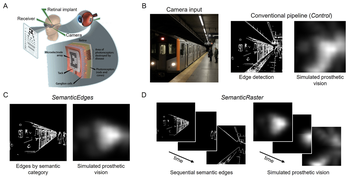

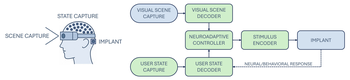

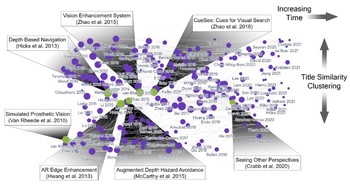

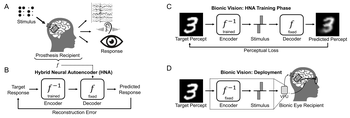

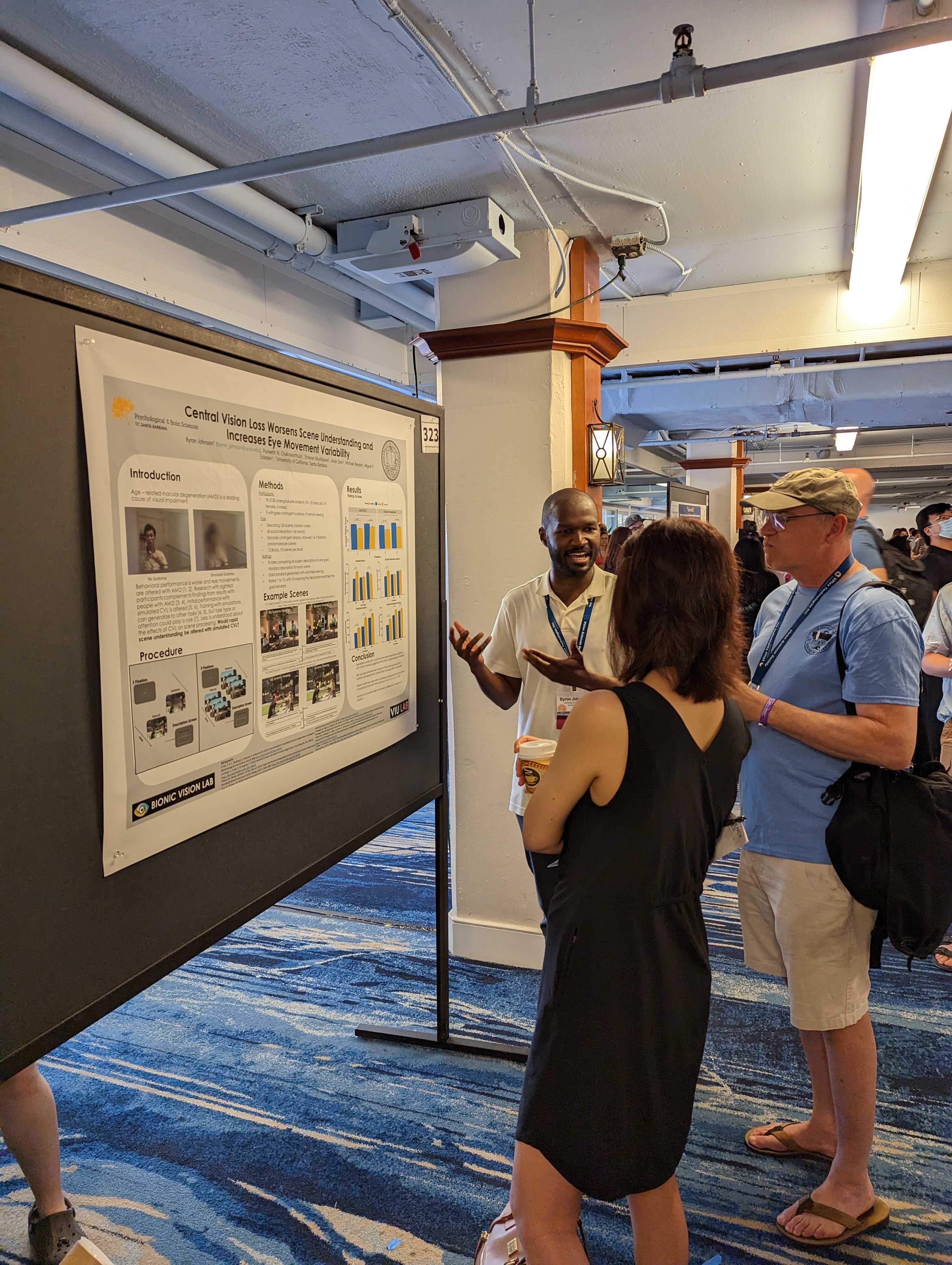

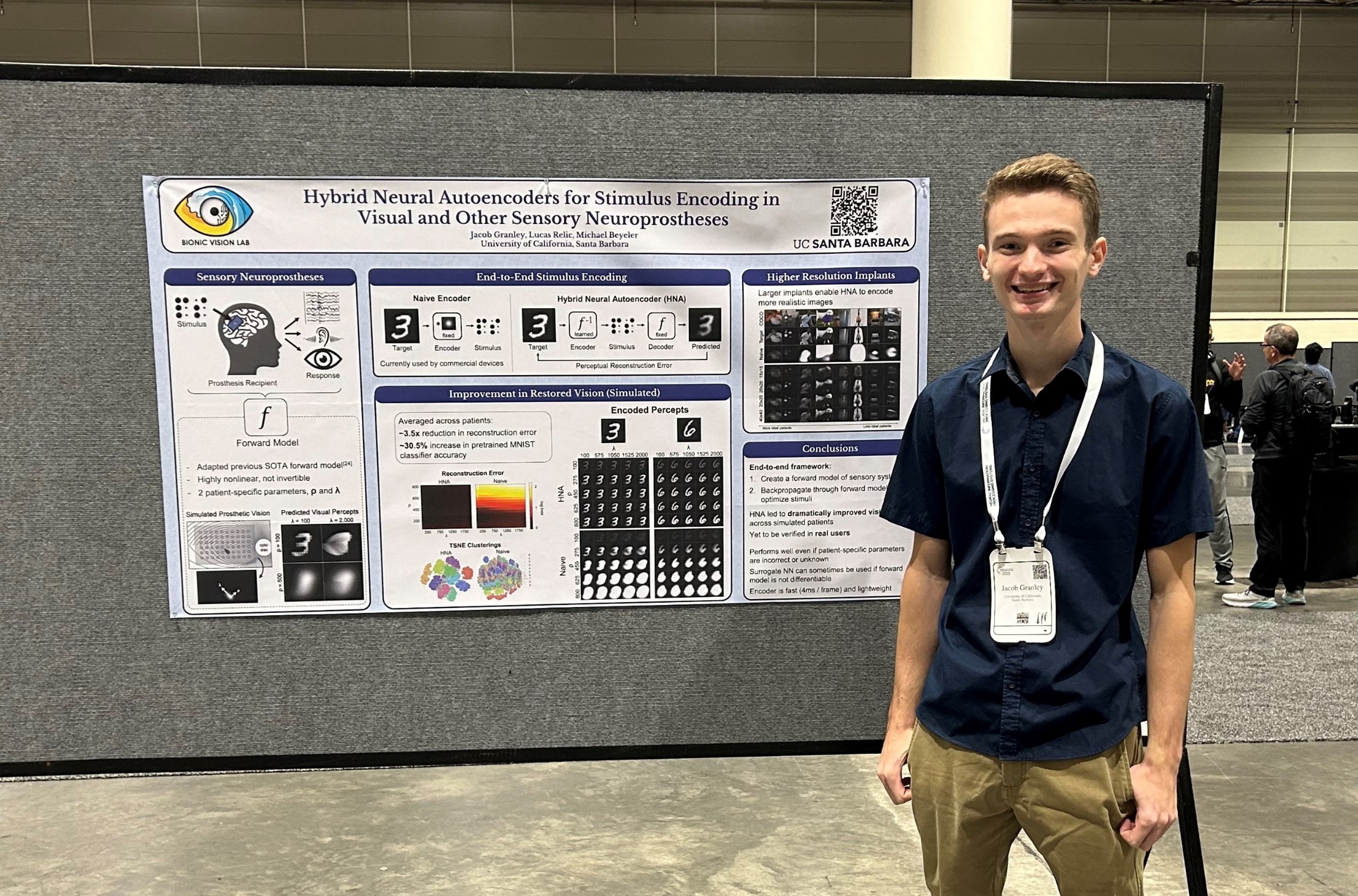

We are an interdisciplinary group exploring the science of human, animal, and artificial vision. Our mission is twofold: to understand how vision works, and to use those insights to build the next generation of visual neurotechnologies for people living with incurable blindness. This means working at the intersection of neuroscience, psychology, and computer science, where questions about how the brain sees meet advances in AI and extended reality (XR).

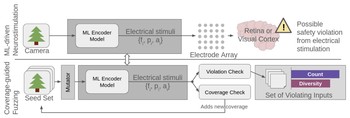

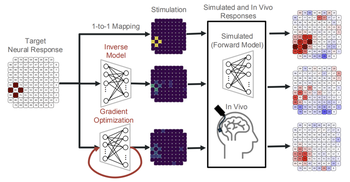

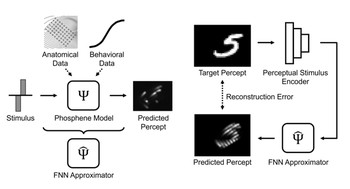

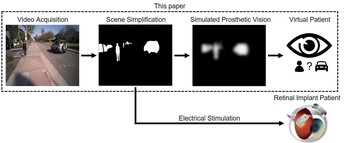

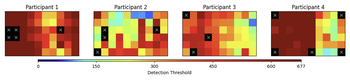

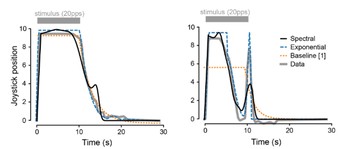

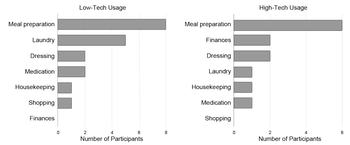

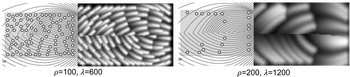

Our work spans the full spectrum from behavior to computation. We study how people with visual impairment perceive and navigate the world, using psychophysics, VR/AR, and ambulatory head/eye/body tracking. We probe visual system function with EEG, TMS, and physiological sensing. And we design biophysical and machine learning models to simulate, evaluate, and optimize visual prostheses, often embedding these models directly into real-time XR environments. This blend of approaches lets us connect brain, behavior, and technology in ways no single discipline can achieve alone.

What sets our lab apart is our close collaboration with both implant developers and bionic eye recipients. We aim to unify efforts across the field by creating open-source tools and standardized evaluation methods that can be used across devices and patient populations. Our ultimate goal is to reshape how vision restoration technologies are conceptualized, tested, and translated, while also pushing the frontiers of AI and XR, so that people with vision loss can live more independent and connected lives.