Instead of focusing on one day restoring ‘natural’ vision, we may be better off thinking about how to create ‘practical’ and ‘useful’ artificial vision now.

Deep learning-based scene simplification for bionic vision

Honorable Mention Best Paper Award

Abstract

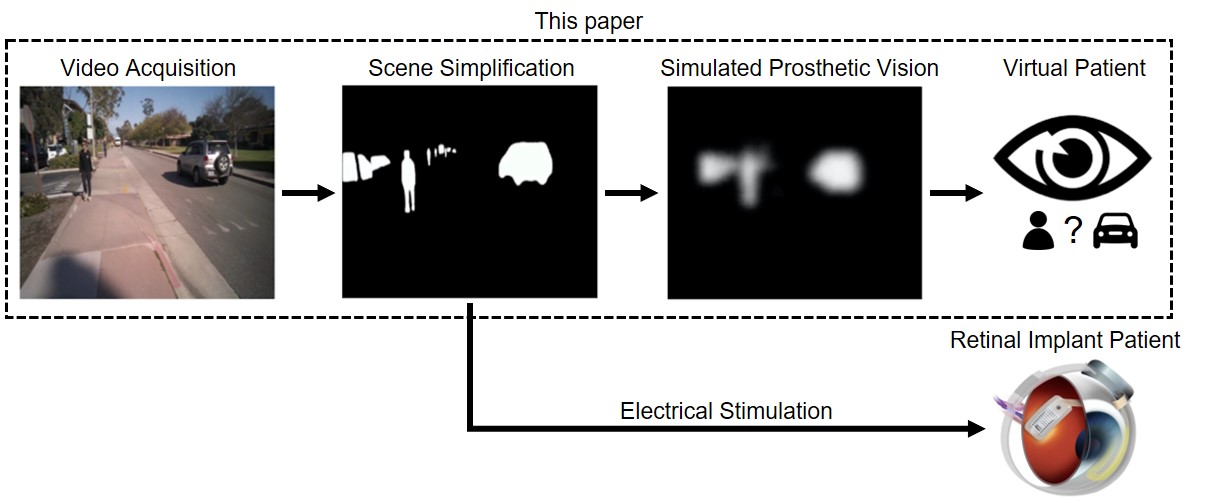

Retinal degenerative diseases cause profound visual impairment in more than 10 million people worldwide, and retinal prostheses are being developed to restore vision to these individuals. Analogous to cochlear implants, these devices electrically stimulate surviving retinal cells to evoke visual percepts (phosphenes). However, the quality of current prosthetic vision is still rudimentary. Rather than aiming to restore “natural” vision, there is potential merit in borrowing state-of-the-art computer vision algorithms as image processing techniques to maximize the usefulness of prosthetic vision. Here we combine deep learning-based scene simplification strategies with a psychophysically validated computational model of the retina to generate realistic predictions of simulated prosthetic vision, and measure their ability to support scene understanding of sighted subjects (virtual patients) in a variety of outdoor scenarios. We show that object segmentation may better support scene understanding than models based on visual saliency and monocular depth estimation. In addition, we highlight the importance of basing theoretical predictions on biologically realistic models of phosphene shape. Overall, this work has the potential to drastically improve the utility of prosthetic vision for people blinded from retinal degenerative diseases.
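To make the pipeline described in the abstract more concrete, here is a minimal sketch of the last step: turning a simplified scene (for example, a binary object segmentation mask) into a simulated percept. It is not the psychophysically validated retinal model used in the paper. It assumes a simplified "scoreboard" rendering in which each electrode produces one isotropic Gaussian phosphene, which is the kind of simplification the abstract cautions against. The function name `simulate_phosphenes`, the 6 × 10 grid, and the `sigma` value are illustrative assumptions.

```python
import numpy as np


def simulate_phosphenes(mask, grid_shape=(6, 10), sigma=4.0):
    """Render a 2D mask as Gaussian phosphenes on a regular electrode grid.

    Hypothetical helper for illustration only: a "scoreboard" approximation,
    not the biologically realistic phosphene model described in the paper.
    """
    mask = np.asarray(mask, dtype=float)
    height, width = mask.shape
    rows, cols = grid_shape

    # Pixel coordinates of every location in the output image.
    yy, xx = np.mgrid[0:height, 0:width]
    percept = np.zeros((height, width))

    for i in range(rows):
        for j in range(cols):
            # Image patch covered by this (assumed) electrode.
            r0, r1 = i * height // rows, (i + 1) * height // rows
            c0, c1 = j * width // cols, (j + 1) * width // cols
            # Stimulus amplitude: mean mask value inside the patch.
            amplitude = mask[r0:r1, c0:c1].mean()
            if amplitude <= 0:
                continue
            # Electrode center, placed in the middle of its patch.
            cy = (i + 0.5) * height / rows
            cx = (j + 0.5) * width / cols
            # Add one isotropic Gaussian blob per active electrode.
            dist_sq = (yy - cy) ** 2 + (xx - cx) ** 2
            percept += amplitude * np.exp(-dist_sq / (2 * sigma ** 2))

    # Keep brightness in the displayable range [0, 1].
    return np.clip(percept, 0.0, 1.0)


# Toy "segmentation mask": one rectangular object in the left half.
mask = np.zeros((60, 100))
mask[20:40, 10:40] = 1.0
percept = simulate_phosphenes(mask)
```

Swapping the Gaussian blob for an elongated, axon-following phosphene shape is where a biologically realistic model would differ from this sketch.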

Excited to share our lab's first paper (@aug_humans): Deep learning-based scene simplification for bionic vision https://t.co/L4R7Ui22nQ

— Bionic Vision Lab (@bionicvisionlab) February 23, 2021


In the News

Bionic Vision Lab featured in NVIDIA’s I AM AI trailer

Our recent research was featured in NVIDIA’s I AM AI trailer, which premiered at NVIDIA GTC 2021.

Nicole Han, Sudhanshu Srivastava, Aiwen Xu, Devi Klein, Michael Beyeler