Beyond sight: Probing alignment between image models and blind V1

(Note: JG and GP contributed equally to this work.)

Spotlight Talk

Abstract

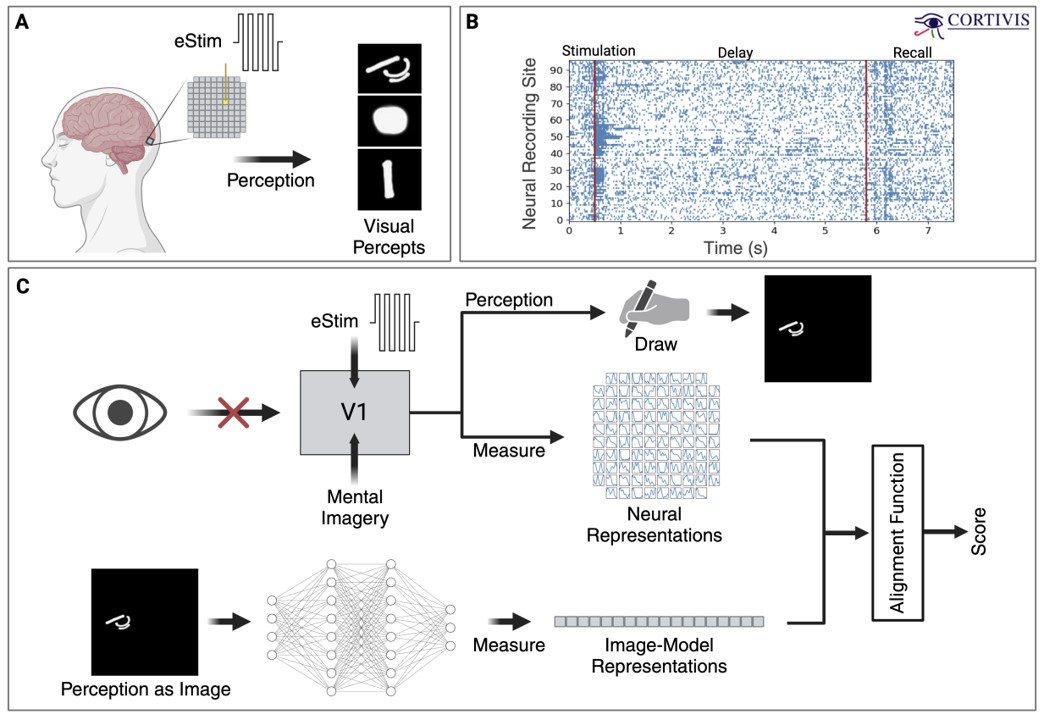

Neural activity in the visual cortex of blind humans persists in the absence of visual stimuli. However, little is known about whether these cortical regions retain the capacity to represent visual information, a question with significant implications for neural interfaces such as visual prostheses. In this work, we present a series of analyses of the representations shared between evoked neural activity in the primary visual cortex (V1) of a blind human with an intracortical visual prosthesis and latent visual representations computed by deep neural networks (DNNs). In the absence of natural visual input, we examine two alternative ways of inducing neural activity: electrical stimulation and mental imagery. We first demonstrate quantitatively that latent DNN activations are aligned with neural activity measured in blind V1. On average, DNNs with higher ImageNet accuracy or higher sighted primate neural predictivity are more predictive of blind V1 activity. We further probe blind V1 alignment in ResNet-50 and propose a proof-of-concept approach to interpreting blind V1 neurons. These results suggest the presence of some form of natural visual processing in blind V1 during electrically evoked visual perception and open new directions for mechanistically understanding and interfacing with blind V1.