BionicVisionXR is an open-source virtual reality toolbox for simulated prosthetic vision that uses a psychophysically validated computational model to allow sighted participants to “see through the eyes” of a bionic eye recipient.

Topic: Visual Prosthesis

Researchers Interested in This Topic

Ally Chu

BS/MS Student

Jacob Granley

PhD Candidate

Yuchen Hou

PhD Student

Byron A. Johnson

PhD Candidate

Lucas Nadolskis

PhD Student

Galen Pogoncheff

PhD Student

Lily M. Turkstra

PhD Student

Apurv Varshney

PhD Student

Research Projects

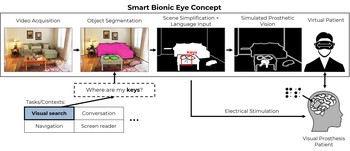

Towards a Smart Bionic Eye

Rather than aiming to one day restore natural vision, we might be better off thinking about how to create practical and useful artificial vision now.

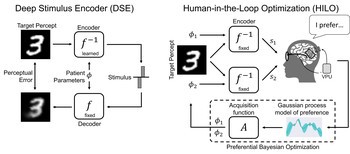

End-to-End Optimization of Bionic Vision

Rather than only predicting the perceptual distortions that a given stimulus produces, one needs to solve the inverse problem: which stimulus best generates a desired visual percept?
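To make the inverse problem concrete, here is a minimal Python sketch under strong simplifying assumptions. The forward model is a hypothetical linear map in which each electrode adds a fixed Gaussian blob of brightness to a small 1-D "percept". The stimulus is then recovered by projected gradient descent on the squared error, with amplitudes clamped to be non-negative. The names `forward` and `invert` and the parameters `rho`, `lr`, and `steps` are illustrative, and this is not the lab's optimization method.

```python
import math

def forward(amps, centers, pixels, rho=1.0):
    """Hypothetical forward model: brightness at each pixel is a sum of
    Gaussian blobs, one per electrode, scaled by that electrode's amplitude."""
    return [
        sum(a * math.exp(-((p - c) ** 2) / (2 * rho ** 2)) for a, c in zip(amps, centers))
        for p in pixels
    ]

def invert(target, centers, pixels, rho=1.0, lr=0.1, steps=2000):
    """Find non-negative amplitudes whose predicted percept best matches
    `target` in the least-squares sense, via projected gradient descent."""
    # w[i][j]: contribution of electrode j (at unit amplitude) to pixel i.
    w = [[math.exp(-((p - c) ** 2) / (2 * rho ** 2)) for c in centers] for p in pixels]
    amps = [0.0] * len(centers)
    for _ in range(steps):
        pred = forward(amps, centers, pixels, rho)
        err = [pr - t for pr, t in zip(pred, target)]
        # Gradient of 0.5 * sum(err**2) with respect to each amplitude.
        grad = [sum(err[i] * w[i][j] for i in range(len(pixels))) for j in range(len(centers))]
        # Take a gradient step, then project back onto amps >= 0.
        amps = [max(0.0, a - lr * g) for a, g in zip(amps, grad)]
    return amps

if __name__ == "__main__":
    centers = [0.0, 3.0, 6.0]
    pixels = [float(i) for i in range(7)]
    # Generate a "desired percept" from a known stimulus, then try to recover it.
    target = forward([1.0, 0.0, 2.0], centers, pixels)
    print([round(a, 3) for a in invert(target, centers, pixels)])
```

With a linear forward model like this one, the problem reduces to non-negative least squares. Realistic percept models are nonlinear, which makes the inverse problem considerably harder.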

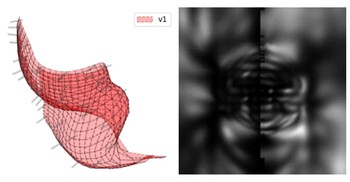

Predicting Visual Outcomes for Visual Prostheses

What do visual prosthesis users see, and why? Clinical studies have shown that the vision provided by current devices differs substantially from normal sight.

pulse2percept: A Python-Based Simulation Framework for Bionic Vision

pulse2percept is an open-source Python simulation framework used to predict the perceptual experience of retinal prosthesis patients across a wide range of implant configurations.
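pulse2percept includes several phosphene models, among them a "scoreboard" model. In this model, each activated electrode produces a Gaussian-shaped bright spot centered on its location, and the spots simply add. The stdlib-only Python sketch below illustrates that idea on a small grid. It is not pulse2percept's API: the function names `scoreboard_percept` and `show`, the grid units, the electrode layout, and the spread parameter `rho` are hypothetical illustrations.

```python
import math

def scoreboard_percept(electrodes, width, height, rho=1.0):
    """Hypothetical scoreboard-style percept on a width x height grid.

    `electrodes` maps (x, y) electrode positions (in grid units) to stimulus
    amplitudes. Each electrode adds a Gaussian blob of brightness with
    spatial spread `rho`, and contributions from all electrodes are summed.
    """
    percept = [[0.0] * width for _ in range(height)]
    for (ex, ey), amp in electrodes.items():
        for y in range(height):
            for x in range(width):
                d2 = (x - ex) ** 2 + (y - ey) ** 2
                percept[y][x] += amp * math.exp(-d2 / (2 * rho ** 2))
    return percept

def show(percept, levels=" .:-=+*#%@"):
    """Print the percept as ASCII art, scaling brightness to the peak value."""
    peak = max(max(row) for row in percept) or 1.0
    for row in percept:
        print("".join(levels[min(len(levels) - 1, int(v / peak * (len(levels) - 1)))] for v in row))

if __name__ == "__main__":
    # Two electrodes stimulated at different amplitudes on a 20 x 8 grid.
    show(scoreboard_percept({(5, 3): 1.0, (14, 4): 0.5}, 20, 8, rho=1.5))
```

A model this simple predicts round, isolated phosphenes. Clinical reports of elongated or distorted percepts are one reason simulation frameworks also offer more detailed models.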