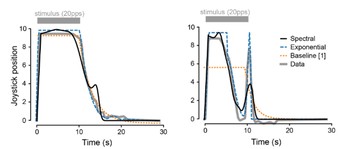

We introduce two computational models designed to accurately predict phosphene fading and persistence under varying stimulus conditions, cross-validated on behavioral data reported by nine users of the Argus II Retinal Prosthesis System.

Predicting Visual Outcomes for Visual Prostheses

A major outstanding challenge is predicting what people “see” when they use their devices.

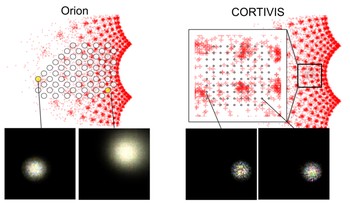

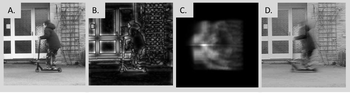

Instead of seeing focal spots of light, current visual implant users report highly distorted percepts, which vary in shape not just across subjects but also across electrodes, and which often fail to assemble into more complex percepts. Furthermore, phosphenes appear fundamentally different depending on whether they are generated with retinal or cortical implants.

The goal of this project is thus to combine psychophysical and neuroanatomical data to inform phosphene models capable of linking electrical stimulation directly to perception.

Project Leads:

Project Affiliate:

UC LEADS Scholar

Principal Investigator:

Assistant Professor

Collaborator:

Professor

Universidad Miguel Hernández, Spain

DP2-LM014268:

Towards a Smart Bionic Eye: AI-Powered Artificial Vision for the Treatment of Incurable Blindness

PI: Michael Beyeler (UCSB)

September 2022 - August 2027

Common Fund, Office of the Director (OD); National Library of Medicine (NLM)

National Institutes of Health (NIH)

Publications

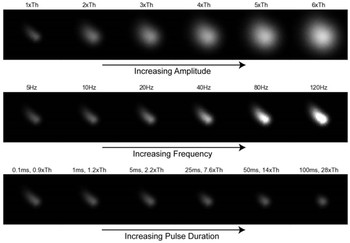

Predicting the temporal dynamics of prosthetic vision

Yuchen Hou, Laya Pullela, Jiaxin Su, Sriya Aluru, Shivani Sista, Xiankun Lu, Michael Beyeler

IEEE EMBC ’24

(Note: YH and LP contributed equally to this work.)

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses on the shared representations between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis, and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler

Workshop on Representational Alignment (Re-Align), ICLR ’24

(Note: JG and GP contributed equally to this work.)

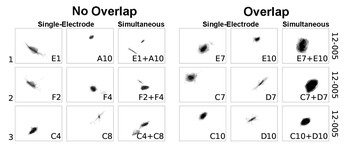

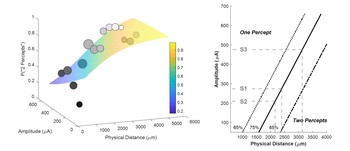

Axonal stimulation affects the linear summation of single-point perception in three Argus II users

We retrospectively analyzed phosphene shape data collected from three Argus II patients to investigate which neuroanatomical and stimulus parameters predict paired-phosphene appearance and whether phosphenes add up linearly.

Yuchen Hou, Devyani Nanduri, Jacob Granley, James D. Weiland, Michael Beyeler

Journal of Neural Engineering

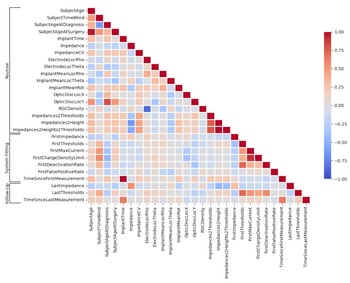

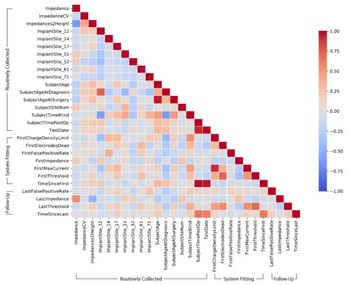

Explainable machine learning predictions of perceptual sensitivity for retinal prostheses

We present explainable artificial intelligence (XAI) models fit on a large longitudinal dataset that can predict perceptual thresholds on individual Argus II electrodes over time.

Galen Pogoncheff, Zuying Hu, Ariel Rokem, Michael Beyeler

Journal of Neural Engineering

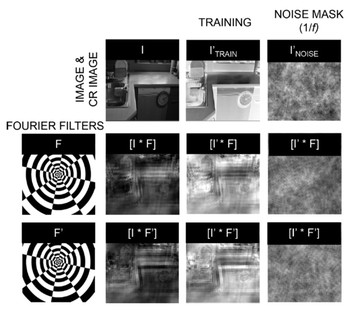

Adapting brain-like neural networks for modeling cortical visual prostheses

We show that a neurologically inspired decoding of CNN activations produces qualitatively accurate phosphenes, comparable to phosphenes reported by real patients.

Jacob Granley, Alexander Riedel, Michael Beyeler

Shared Visual Representations in Human & Machine Intelligence (SVRHM) Workshop, NeurIPS ’22

Greedy optimization of electrode arrangement for epiretinal prostheses

We optimize electrode arrangement of epiretinal implants to maximize visual subfield coverage.

Ashley Bruce, Michael Beyeler

Medical Image Computing and Computer Assisted Intervention (MICCAI) ’22

Factors affecting two-point discrimination in Argus II patients

We explored the causes of high thresholds and poor spatial resolution in users of the Argus II epiretinal implant.

Ezgi I. Yücel, Roksana Sadeghi, Arathy Kartha, Sandra R. Montezuma, Gislin Dagnelie, Ariel Rokem, Geoffrey M. Boynton, Ione Fine, Michael Beyeler

Frontiers in Neuroscience

Learning to see again: Perceptual learning of simulated abnormal on- off-cell population responses in sighted individuals

We show that sighted individuals can learn to adapt to the unnatural on- and off-cell population responses produced by electronic and optogenetic sight recovery technologies.

Rebecca B. Esquenazi, Kimberly Meier, Michael Beyeler, Geoffrey M. Boynton, Ione Fine

Journal of Vision 21(10)

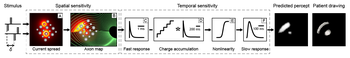

A computational model of phosphene appearance for epiretinal prostheses

We present a phenomenological model that predicts phosphene appearance as a function of stimulus amplitude, frequency, and pulse duration.

Jacob Granley, Michael Beyeler

IEEE Engineering in Medicine and Biology Society Conference (EMBC) ’21

Explainable AI for retinal prostheses: Predicting electrode deactivation from routine clinical measures

We present an explainable artificial intelligence (XAI) model fit on a large longitudinal dataset that can predict electrode deactivation in Argus II.

Zuying Hu, Michael Beyeler

IEEE EMBS Conference on Neural Engineering (NER) ’21

Model-based recommendations for optimal surgical placement of epiretinal implants

We systematically explored the space of possible implant configurations to make recommendations for optimal intraocular positioning of Argus II.

Michael Beyeler, Geoffrey M. Boynton, Ione Fine, Ariel Rokem

Medical Image Computing and Computer Assisted Intervention (MICCAI) ’19

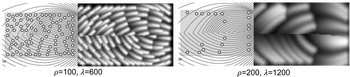

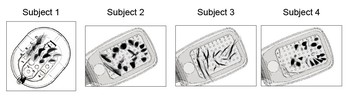

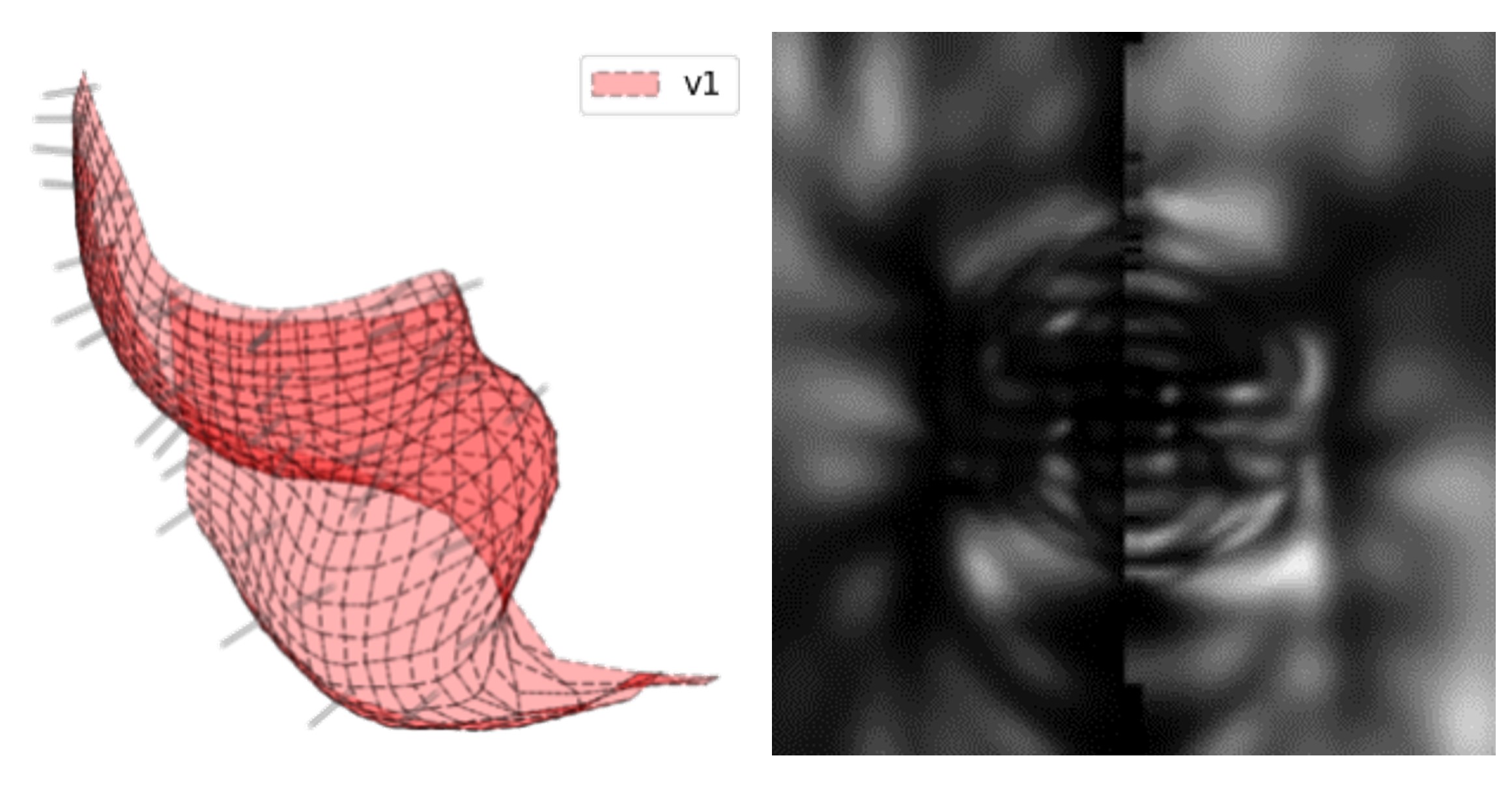

A model of ganglion axon pathways accounts for percepts elicited by retinal implants

We show that the perceptual experience of retinal implant users can be accurately predicted using a computational model that simulates each individual patient’s retinal ganglion axon pathways.

Michael Beyeler, Devyani Nanduri, James D. Weiland, Ariel Rokem, Geoffrey M. Boynton, Ione Fine

Scientific Reports 9(1):9199

Learning to see again: Biological constraints on cortical plasticity and the implications for sight restoration technologies

The goal of this review is to summarize the vast basic science literature on developmental and adult cortical plasticity with an emphasis on how this literature might relate to the field of prosthetic vision.

Michael Beyeler, Ariel Rokem, Geoffrey M. Boynton, Ione Fine

Journal of Neural Engineering 14(5)

pulse2percept: A Python-based simulation framework for bionic vision

pulse2percept is an open-source Python simulation framework used to predict the perceptual experience of retinal prosthesis patients across a wide range of implant configurations.

Michael Beyeler, Geoffrey M. Boynton, Ione Fine, Ariel Rokem

Python in Science Conference (SciPy) ’17