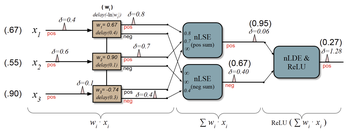

We propose a novel temporal-digital architecture that encodes ANN weights as delays and activations as signal arrival times. It enables full ANN execution with temporal reuse, noise-tolerant summation, and hybrid memory, achieving up to 11× energy and 4× latency improvements over SNNs and 3.5× energy savings over 8-bit digital systolic arrays.

Event-Based Vision at the Edge

Neuromorphic event-based vision sensors are poised to dramatically improve latency, robustness, and power consumption in applications ranging from smart sensing to autonomous driving and assistive technologies for people who are blind.

Soon these sensors may power low-vision aids and retinal implants, where the visual scene has to be processed quickly and efficiently before it is displayed. However, novel methods are needed to process the unconventional output of these sensors and unlock their potential.

Faculty Research Grant:

Event-based scene understanding for bionic vision

PI: Michael Beyeler (UCSB)

July 2021 - June 2022

Academic Senate

University of California, Santa Barbara (UCSB)

Publications

Single spike artificial neural networks

Rhys Gretsch, Michael Beyeler, Jeremy Lau, Timothy Sherwood International Symposium on Computer Architecture (ISCA) ’25

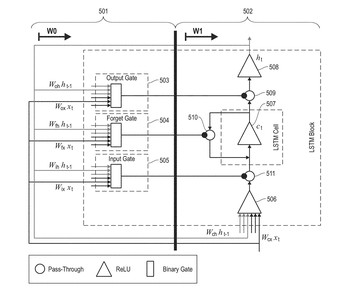

Long-short term memory (LSTM) cells on spiking neuromorphic hardware

We present a way to implement long short-term memory (LSTM) cells on spiking neuromorphic hardware.

Rathinakumar Appuswamy, Michael Beyeler, Pallab Datta, Myron Flickner, Dharmendra S Modha US Patent No. 11,636,317

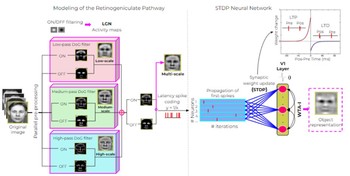

Efficient multi-scale representation of visual objects using a biologically plausible spike-latency code and winner-take-all inhibition

We present an SNN model that uses spike-latency coding and winner-take-all inhibition to efficiently represent visual objects with as few as 15 spikes per neuron.

Melani Sanchez-Garcia, Tushar Chauhan, Benoit R. Cottereau, Michael Beyeler Biological Cybernetics

(Note: MSG and TC are co-first authors. BRC and MB are co-last authors.)

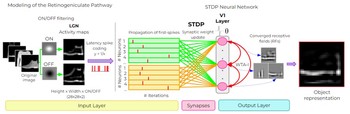

Efficient visual object representation using a biologically plausible spike-latency code and winner-take-all inhibition

We present an SNN model that uses spike-latency coding and winner-take-all inhibition to efficiently represent visual stimuli from the Fashion MNIST dataset.

Melani Sanchez-Garcia, Tushar Chauhan, Benoit R. Cottereau, Michael Beyeler NeuroVision Workshop, IEEE/CVF Computer Vision and Pattern Recognition Conference (CVPR) ’22

A GPU-accelerated cortical neural network model for visually guided robot navigation

We present a cortical neural network model for visually guided navigation that has been embodied on a physical robot exploring a real-world environment. The model includes a rate-based motion energy model for area V1 and a spiking neural network model for cortical area MT. The model generates a cortical representation of optic flow, determines the …

Michael Beyeler, Nicolas Oros, Nikil Dutt, Jeffrey L. Krichmar Neural Networks 72: 75-87