Efficient multi-scale representation of visual objects using a biologically plausible spike-latency code and winner-take-all inhibition

(Note: MSG and TC are co-first authors. BRC and MB are co-last authors.)

Abstract

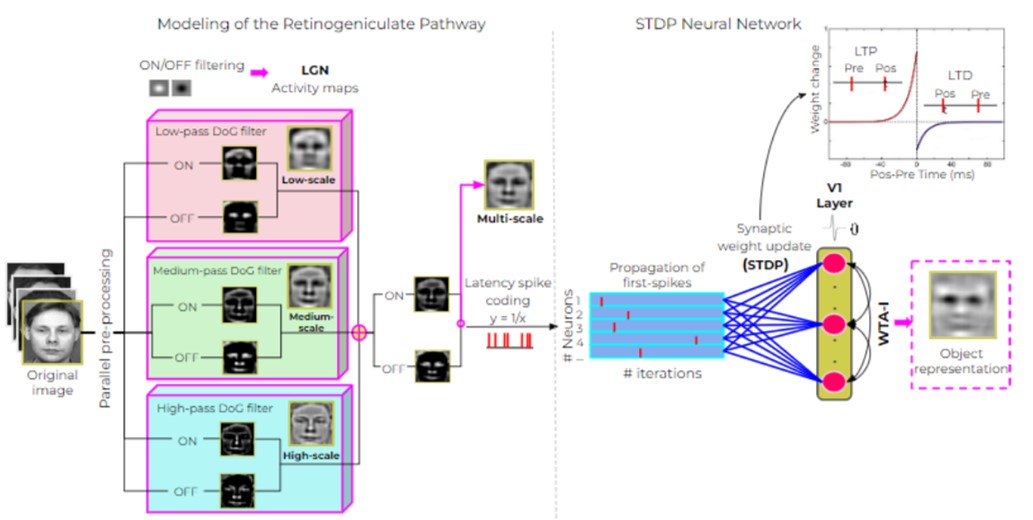

Deep neural networks have surpassed human performance in key visual challenges such as object recognition, but require a large amount of energy, computation, and memory. In contrast, spiking neural networks (SNNs) have the potential to improve both the efficiency and biological plausibility of object recognition systems. Here we present a SNN model that uses spike-latency coding and winner-take-all inhibition (WTA-I) to efficiently represent visual stimuli using multi-scale parallel processing. Mimicking neuronal response properties in early visual cortex, images were preprocessed with three different spatial frequency (SF) channels, before they were fed to a layer of spiking neurons whose synaptic weights were updated using spike-timing-dependent-plasticity (STDP). We investigate how the quality of the represented objects changes under different SF bands and WTA-I schemes. We demonstrate that a network of 200 spiking neurons tuned to three SFs can efficiently represent objects with as little as 15 spikes per neuron. Studying how core object recognition may be implemented using biologically plausible learning rules in SNNs may not only further our understanding of the brain, but also lead to novel and efficient artificial vision systems.