Rather than predicting perceptual distortions, one needs to solve the inverse problem: What is the best stimulus to generate a desired visual percept?

Jacob Granley

(he/him)

Postdoctoral Researcher

Computer Science

University of California, Santa Barbara

Jacob Granley is a Postdoctoral Researcher in the Department of Computer Science.

Prior to joining UCSB, he received his Master’s and Bachelor’s degrees in Computer Science from the Colorado School of Mines. He pursued his PhD under Dr. Beyeler as part of the Bionic Vision Lab, where he researches how modeling, deep learning, and human-in-the-loop optimization can be used to improve stimulus encoding in neural interfaces.

Outside of research, he enjoys rock climbing and camping.

- 3205 BioEngineering

- jgranley@ucsb.edu

Education

- PhD in Computer Science, 2025, University of California, Santa Barbara

- MS in Computer Science, 2020, Colorado School of Mines

- BS in Computer Science, 2019, Colorado School of Mines


Project Lead

Predicting Visual Outcomes for Visual Prostheses

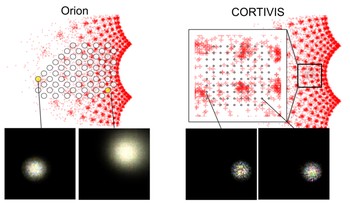

What do visual prosthesis users see, and why? Clinical studies have shown that the vision provided by current devices differs substantially from normal sight.

pulse2percept: A Python-Based Simulation Framework for Bionic Vision

pulse2percept is an open-source Python simulation framework used to predict the perceptual experience of retinal prosthesis patients across a wide range of implant configurations.

Project Affiliate

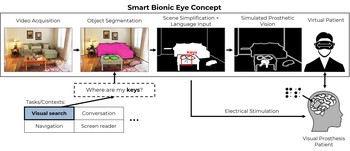

Towards a Smart Bionic Eye

Rather than aiming to one day restore natural vision, we might be better off thinking about how to create practical and useful artificial vision now.

Publications

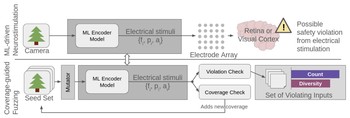

Fuzzing the brain: Automated stress testing for the safety of ML-driven neurostimulation

We propose a systematic, quantitative approach to detect and characterize unsafe stimulation patterns in ML-driven neurostimulation systems.

Mara Downing, Matthew Peng, Jacob Granley, Michael Beyeler, Tevfik Bultan arXiv:2512.05383

Deep learning-based control of electrically evoked activity in human visual cortex

We developed a data-driven neural control framework for a visual cortical prosthesis in a blind human, showing that deep learning can synthesize efficient, stable stimulation patterns that reliably evoke percepts and outperform conventional calibration methods.

Pehuén Moure, Jacob Granley, Fabrizio Grani, Leili Soo, Antonio Lozano, Rocio López-Peco, Adrián Villamarin-Ortiz, Cristina Soto-Sánchez, Shih-Chii Liu, Michael Beyeler, Eduardo Fernández bioRxiv

(Note: PM, JG, and FG are co-first authors. SL, MB, and EF are co-last authors.)

Evaluating deep human-in-the-loop optimization for retinal implants using sighted participants

We evaluate HILO using sighted participants viewing simulated prosthetic vision to assess its ability to optimize stimulation strategies under realistic conditions.

Eirini Schoinas, Adyah Rastogi, Anissa Carter, Jacob Granley, Michael Beyeler IEEE EMBC ’25

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses on the shared representations between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis, and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler Workshop on Representational Alignment (Re-Align), ICLR ’24

(Note: JG and GP contributed equally to this work.)

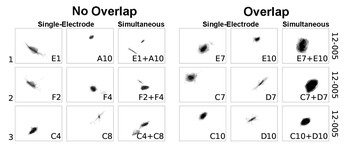

Axonal stimulation affects the linear summation of single-point perception in three Argus II users

We retrospectively analyzed phosphene shape data collected from three Argus II patients to investigate which neuroanatomical and stimulus parameters predict paired-phosphene appearance and whether phosphenes add up linearly.

Yuchen Hou, Devyani Nanduri, Jacob Granley, James D. Weiland, Michael Beyeler Journal of Neural Engineering

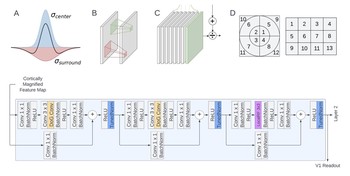

Explaining V1 properties with a biologically constrained deep learning architecture

We systematically incorporated neuroscience-derived architectural components into CNNs to identify a set of mechanisms and architectures that comprehensively explain neural activity in V1.

Galen Pogoncheff, Jacob Granley, Michael Beyeler 37th Conference on Neural Information Processing Systems (NeurIPS) ’23

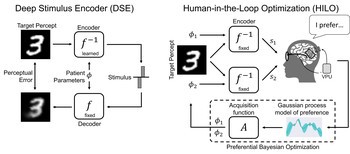

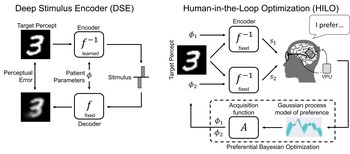

Human-in-the-loop optimization for deep stimulus encoding in visual prostheses

We propose a personalized stimulus encoding strategy that combines state-of-the-art deep stimulus encoding with preferential Bayesian optimization.

Jacob Granley, Tristan Fauvel, Matthew Chalk, Michael Beyeler 37th Conference on Neural Information Processing Systems (NeurIPS) ’23

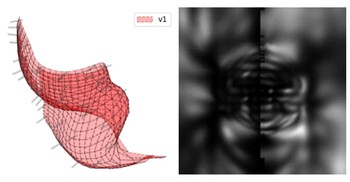

Adapting brain-like neural networks for modeling cortical visual prostheses

We show that a neurologically-inspired decoding of CNN activations produces qualitatively accurate phosphenes, comparable to phosphenes reported by real patients.

Jacob Granley, Alexander Riedel, Michael Beyeler Shared Visual Representations in Human & Machine Intelligence (SVRHM) Workshop, NeurIPS ’22

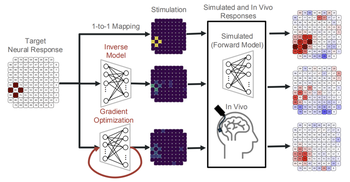

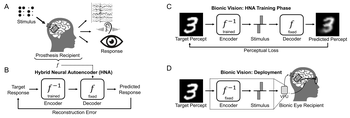

Hybrid neural autoencoders for stimulus encoding in visual and other sensory neuroprostheses

What is the required stimulus to produce a desired percept? Here we frame this as an end-to-end optimization problem, where a deep neural network encoder is trained to invert a known, fixed forward model that approximates the underlying biological system.

Jacob Granley, Lucas Relic, Michael Beyeler 36th Conference on Neural Information Processing Systems (NeurIPS) ’22

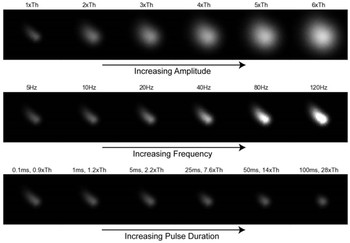

A computational model of phosphene appearance for epiretinal prostheses

We present a phenomenological model that predicts phosphene appearance as a function of stimulus amplitude, frequency, and pulse duration.

Jacob Granley, Michael Beyeler IEEE Engineering in Medicine and Biology Society Conference (EMBC) ’21

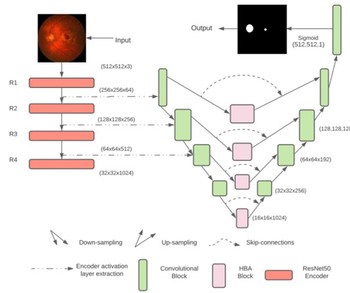

U-Net with hierarchical bottleneck attention for landmark detection in fundus images of the degenerated retina

We propose HBA-U-Net: a U-Net backbone with hierarchical bottleneck attention to highlight retinal abnormalities that may be important for fovea and optic disc segmentation in the degenerated retina.

Shuyun Tang, Ziming Qi, Jacob Granley, Michael Beyeler MICCAI Workshop on Ophthalmic Image Analysis - OMIA ’21