Understanding the visual system in health and disease is a key issue for neuroscience and neuroengineering applications such as visual prostheses.

Lucas is currently a PhD student in the Interdepartmental Graduate Program in Dynamical Neuroscience (DYNS) at UC Santa Barbara, where he investigates novel approaches to cortical implants for the blind. Blind since the age of five, Lucas has broad interests, ranging from neuroscience to accessibility, that can be summarized as efforts to improve the lives of blind people around the world.

Lucas earned his BS in Computer Science with a minor in Neuroscience from the University of Minnesota in 2021, where he performed research on autonomous navigation, computer vision and brain-computer interfaces. He then earned his MS in Biomedical Engineering from Carnegie Mellon University, where his primary work focused on analyzing top-down pathways of the visual system and how they could be integrated into cortical implants for the blind. In addition, he worked with the Human-Computer Interaction department on issues related to accessibility in data visualization, an area he hopes to explore further in the future.

Outside of the lab, most of his free time is occupied by music, traveling and searching for audio-described content.

- 3205 BioEngineering

- lgilnadolskis@ucsb.edu

Education

- PhD in Dynamical Neuroscience, 2028 (expected)

  University of California, Santa Barbara

- MS in Computational Biomedical Engineering, 2023

  Carnegie Mellon University, Pittsburgh, PA

- BS in Computer Science, 2021

  University of Minnesota-Twin Cities

Project Lead

Project Affiliate

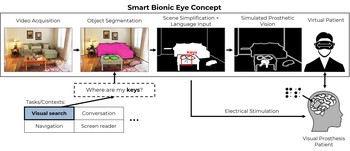

Towards a Smart Bionic Eye

Rather than aiming to one day restore natural vision, we might be better off thinking about how to create practical and useful artificial vision now.

Understanding the Information Needs of People Who Are Blind or Visually Impaired

A nuanced understanding of the strategies that people who are blind or visually impaired employ to perform different instrumental activities of daily living (iADLs) is essential to the success of future visual accessibility aids.

Assistive Technologies for People Who Are Blind

This research explores the integration of computer vision into various assistive devices, aiming to enhance urban navigation and environmental interaction for individuals who are blind or visually impaired.

Publications

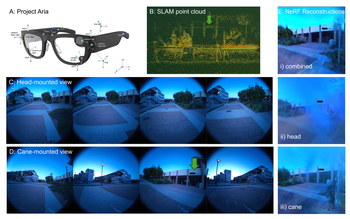

Beyond physical reach: Comparing head- and cane-mounted cameras for last-mile navigation by blind users

We evaluate head- and cane-mounted cameras for blind navigation and show that combining both yields superior spatial perception, guiding the design of hybrid, user-aligned assistive systems.

Apurv Varshney, Lucas Nadolskis, Tobias Höllerer, Michael Beyeler

arXiv:2504.19345

Aligning visual prosthetic development with implantee needs

Our interview study found a significant gap between researcher expectations and implantee experiences with visual prostheses, underscoring the importance of focusing future research on usability and real-world application.

Lucas Nadolskis, Lily M. Turkstra, Ebenezer Larnyo, Michael Beyeler

Translational Vision Science & Technology (TVST) 13(28)

(Note: LN and LMT contributed equally to this work.)

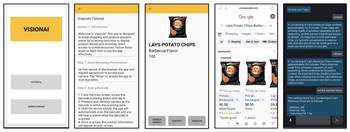

VisionAI - Shopping Assistance for People with Vision Impairments

We introduce VisionAI, a mobile application designed to enhance the in-store shopping experience for individuals with vision impairments.

Anika Arora, Lucas Nadolskis, Michael Beyeler, Misha Sra

2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)

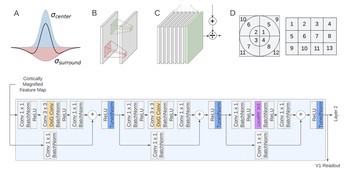

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses on the shared representations between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis, and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler

Workshop on Representational Alignment (Re-Align), ICLR ’24

(Note: JG and GP contributed equally to this work.)