We present a network-adaptive pipeline for cloud-assisted visual preprocessing for artificial vision, in which real-time round-trip time (RTT) feedback dynamically modulates image resolution, compression, and transmission rate, explicitly prioritizing temporal continuity under adverse network conditions.
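The RTT-driven adaptation described above can be illustrated with a small controller. This is a minimal sketch under stated assumptions, not the pipeline from the paper: the quality ladder, the RTT thresholds, and the names `RttController` and `LADDER` are hypothetical, and the exponentially weighted RTT average borrows the 1/8 smoothing weight TCP uses for its SRTT estimate (RFC 6298). Resolution and JPEG quality are stepped down first and frame rate only last, mirroring the stated priority on temporal continuity.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical quality ladder, ordered from highest to lowest fidelity.
# Each rung is (frame width in px, JPEG quality 1-100, frames per second).
# Width and quality are sacrificed first; frame rate drops only on the last
# two rungs, so temporal continuity is preserved for as long as possible.
LADDER = [
    (640, 85, 30),
    (480, 70, 30),
    (320, 55, 30),
    (240, 40, 30),
    (240, 30, 20),
    (160, 25, 15),
]


@dataclass
class RttController:
    alpha: float = 0.125          # EWMA weight for new samples (TCP's SRTT constant)
    high_ms: float = 120.0        # step down one rung above this smoothed RTT
    low_ms: float = 60.0          # step up one rung below this smoothed RTT
    srtt: Optional[float] = None  # smoothed RTT estimate in milliseconds
    level: int = 0                # current index into LADDER (0 = best quality)

    def update(self, rtt_ms: float) -> Tuple[int, int, int]:
        """Fold one RTT sample into the estimate and return (width, quality, fps)."""
        if self.srtt is None:
            self.srtt = rtt_ms
        else:
            self.srtt = (1 - self.alpha) * self.srtt + self.alpha * rtt_ms
        if self.srtt > self.high_ms and self.level < len(LADDER) - 1:
            self.level += 1
        elif self.srtt < self.low_ms and self.level > 0:
            self.level -= 1
        return LADDER[self.level]


ctrl = RttController()
for rtt in [40, 45, 300, 320, 310, 50]:
    print(rtt, ctrl.update(rtt))
```

The gap between `low_ms` and `high_ms` acts as hysteresis, so a single RTT spike or dip does not make the stream oscillate between rungs; the smoothing also means the controller reacts to sustained congestion rather than to one slow frame.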

Towards a Smart Bionic Eye

Rather than aiming to one day restore natural vision (which may remain elusive until we fully understand the neural code of vision), we might be better off thinking about how to create practical and useful artificial vision now. Specifically, a visual prosthesis could provide visual augmentations through AI-based scene understanding (e.g., by highlighting important objects), tailored to specific real-world tasks known to affect the quality of life of people who are blind (e.g., face recognition, outdoor navigation, self-care).

In the future, these visual augmentations could be combined with GPS to give directions, warn users of impending dangers in their immediate surroundings, or even extend vision beyond the visible spectrum with an infrared sensor (think bionic night vision). Once the quality of the generated artificial vision reaches a certain threshold, many exciting avenues open up.

For many visually impaired, no effective treatments exist. With the help of a $1.5M grant, #UCSB's @ProfBeyeler will work to create an AI-powered bionic eye to generate artificial vision and increase the quality of life for millions affected by blindness. https://t.co/bdj9geIVYw

— UC Santa Barbara (@ucsantabarbara) October 7, 2022

Project Lead:

PhD Student

Project Affiliates:

PhD Candidate

Postdoctoral Researcher

PhD Student

PhD Student

PhD Student

Principal Investigator:

Associate Professor

Collaborator:

Professor

Universidad Miguel Hernández, Spain

DP2-LM014268:

Towards a Smart Bionic Eye: AI-Powered Artificial Vision for the Treatment of Incurable Blindness

PI: Michael Beyeler (UCSB)

September 2022–August 2027

Common Fund, Office of the Director (OD); National Library of Medicine (NLM)

National Institutes of Health (NIH)

Publications

Network-adaptive cloud preprocessing for visual neuroprostheses

Jiayi Liu, Yilin Wang, Michael Beyeler arXiv

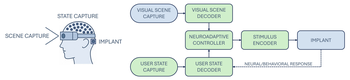

Bionic vision as neuroadaptive XR: Closed-loop perceptual interfaces for neurotechnology

Rather than pursuing a (degraded) imitation of natural sight, bionic vision might be better understood as a form of neuroadaptive XR: a perceptual interface that forgoes visual fidelity in favor of delivering sparse, personalized cues shaped (at its full potential) by user intent, behavioral context, and cognitive state.

Michael Beyeler 2025 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)

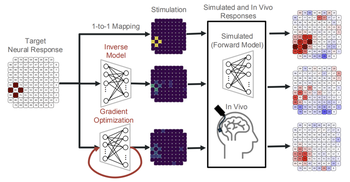

Deep learning-based control of electrically evoked activity in human visual cortex

We developed a data-driven neural control framework for a visual cortical prosthesis in a blind human, showing that deep learning can synthesize efficient, stable stimulation patterns that reliably evoke percepts and outperform conventional calibration methods.

Pehuén Moure, Jacob Granley, Fabrizio Grani, Leili Soo, Antonio Lozano, Rocio López-Peco, Adrián Villamarin-Ortiz, Cristina Soto-Sánchez, Shih-Chii Liu, Michael Beyeler, Eduardo Fernández bioRxiv

(Note: PM, JG, and FG are co-first authors. SL, MB, and EF are co-last authors.)

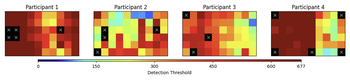

Efficient spatial estimation of perceptual thresholds for retinal implants via Gaussian process regression

We propose a Gaussian Process Regression (GPR) framework to predict perceptual thresholds at unsampled locations while leveraging uncertainty estimates to guide adaptive sampling.

Roksana Sadeghi, Michael Beyeler IEEE EMBC ’25
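The GPR idea in this entry can be sketched in a few lines of NumPy. This is an illustrative sketch, not the paper's implementation: it assumes a squared-exponential kernel with fixed, hand-picked hyperparameters (no fitting), a hypothetical 6 x 10 electrode grid, a made-up smooth threshold map, and simulated noisy measurements; all function names are hypothetical. Adaptive sampling here simply queries the unmeasured electrode with the largest posterior standard deviation, which is only one of several possible uncertainty-based acquisition rules.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=2.0, variance=1.0):
    """Squared-exponential covariance between rows of a (n, d) and b (m, d)."""
    d2 = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2 * a @ b.T
    return variance * np.exp(-0.5 * np.maximum(d2, 0.0) / length_scale**2)


def gp_posterior(x_train, y_train, x_test, length_scale=2.0, variance=1.0, noise=1e-2):
    """GP posterior mean and std at x_test; noise is the observation-noise variance.

    The targets are centred on their sample mean, which serves as a constant prior mean.
    """
    y_mean = y_train.mean()
    K = rbf_kernel(x_train, x_train, length_scale, variance) + noise * np.eye(len(x_train))
    K_s = rbf_kernel(x_train, x_test, length_scale, variance)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_train - y_mean))
    mean = K_s.T @ alpha + y_mean
    v = np.linalg.solve(L, K_s)
    var = variance - np.sum(v**2, axis=0)
    return mean, np.sqrt(np.maximum(var, 0.0))


def adaptive_sampling(grid, measure, n_init=5, n_queries=15, seed=0, **gp_kw):
    """Measure a few random electrodes, then repeatedly query the most uncertain one."""
    rng = np.random.default_rng(seed)
    sampled = [int(i) for i in rng.choice(len(grid), size=n_init, replace=False)]
    values = [measure(i) for i in sampled]
    for _ in range(n_queries):
        _, std = gp_posterior(grid[sampled], np.array(values), grid, **gp_kw)
        std[sampled] = -np.inf  # never re-measure an electrode
        nxt = int(np.argmax(std))
        sampled.append(nxt)
        values.append(measure(nxt))
    mean, std = gp_posterior(grid[sampled], np.array(values), grid, **gp_kw)
    return sampled, mean, std


# Hypothetical 6 x 10 electrode grid and made-up threshold map (arbitrary units).
grid = np.array([(r, c) for r in range(6) for c in range(10)], dtype=float)
true_thr = 50 + 20 * np.sin(grid[:, 0] / 3) + 10 * np.cos(grid[:, 1] / 4)
noise_rng = np.random.default_rng(1)


def measure(i):
    """Simulated psychophysical measurement: true threshold plus Gaussian noise."""
    return true_thr[i] + noise_rng.normal(0.0, 1.0)


sampled, mean, std = adaptive_sampling(grid, measure, variance=100.0, noise=1.0)
print(f"measured {len(sampled)} of {len(grid)} electrodes, "
      f"mean abs error {np.abs(mean - true_thr).mean():.2f}")
```

The returned `mean` is the predicted threshold at every electrode, including the ones never measured, and `std` says how much to trust each prediction; in practice the kernel hyperparameters would be fit to data rather than fixed as they are here.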

Evaluating deep human-in-the-loop optimization for retinal implants using sighted participants

We evaluate deep human-in-the-loop optimization (HILO) with sighted participants viewing simulated prosthetic vision to assess its ability to optimize stimulation strategies under realistic conditions.

Eirini Schoinas, Adyah Rastogi, Anissa Carter, Jacob Granley, Michael Beyeler IEEE EMBC ’25

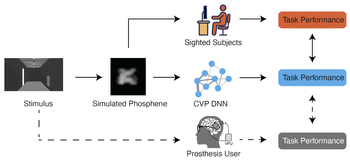

A deep learning framework for predicting functional visual performance in bionic eye users

We introduce a computational virtual patient (CVP) pipeline that integrates anatomically grounded phosphene simulation with task-optimized deep neural networks to forecast patient perceptual capabilities across diverse prosthetic designs and tasks.

Jonathan Skaza, Shravan Murlidaran, Apurv Varshney, Ziqi Wen, William Wang, Miguel P. Eckstein, Michael Beyeler bioRxiv

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses of the representations shared between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler Workshop on Representational Alignment (Re-Align), ICLR ’24

(Note: JG and GP contributed equally to this work.)
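The entry does not say which alignment analyses were run. Purely to illustrate the general idea, representational similarity analysis (RSA) is one standard way to compare two representations of the same stimuli: build a dissimilarity matrix for each and correlate the two. The sketch below runs on synthetic data; the function names, array sizes, and data are hypothetical, and nothing here reproduces the paper's analyses.

```python
import numpy as np


def rdm(patterns):
    """Representational dissimilarity matrix: 1 - Pearson r between rows (stimuli)."""
    return 1.0 - np.corrcoef(patterns)


def spearman(a, b):
    """Spearman rank correlation of two 1-D arrays (assumes no tied values)."""
    ra = np.argsort(np.argsort(a)).astype(float)
    rb = np.argsort(np.argsort(b)).astype(float)
    return float(np.corrcoef(ra, rb)[0, 1])


def rsa_score(neural, model):
    """Correlate the upper triangles of two RDMs built from (stimuli x features) arrays."""
    iu = np.triu_indices(neural.shape[0], k=1)
    return spearman(rdm(neural)[iu], rdm(model)[iu])


# Synthetic stand-ins: 20 stimuli, a shared 8-D latent, 16 "electrodes", 64 model units.
rng = np.random.default_rng(0)
latent = rng.normal(size=(20, 8))
neural = latent @ rng.normal(size=(8, 16)) + 0.5 * rng.normal(size=(20, 16))
model = latent @ rng.normal(size=(8, 64))
unrelated = rng.normal(size=(20, 64))

print(f"aligned model:   {rsa_score(neural, model):.2f}")
print(f"unrelated model: {rsa_score(neural, unrelated):.2f}")
```

Because RSA compares only the geometry of the two representations (which stimuli are similar to which), it sidesteps the fact that electrodes and network units have no one-to-one correspondence.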

A systematic review of extended reality (XR) for understanding and augmenting vision loss

We present a systematic literature review of 227 publications from 106 different venues assessing the potential of XR technology to further visual accessibility.

Justin Kasowski, Byron A. Johnson, Ryan Neydavood, Anvitha Akkaraju, Michael Beyeler Journal of Vision 23(5):5, 1–24

(Note: JK and BAJ are co-first authors.)

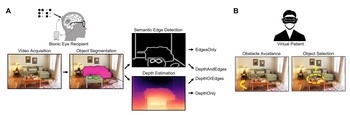

The relative importance of depth cues and semantic edges for indoor mobility using simulated prosthetic vision in immersive virtual reality

We used a neurobiologically inspired model of simulated prosthetic vision in an immersive virtual reality environment to test the relative importance of semantic edges and depth cues for avoiding obstacles and identifying objects.

Alex Rasla, Michael Beyeler 28th ACM Symposium on Virtual Reality Software and Technology (VRST) ’22

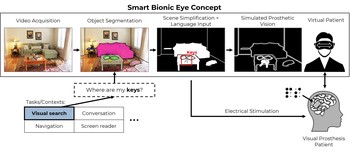

Towards a Smart Bionic Eye: AI-powered artificial vision for the treatment of incurable blindness

Rather than aiming to represent the visual scene as naturally as possible, a Smart Bionic Eye could provide visual augmentations through the means of artificial intelligence–based scene understanding, tailored to specific real-world tasks that are known to affect the quality of life of people who are blind.

Michael Beyeler, Melani Sanchez-Garcia Journal of Neural Engineering

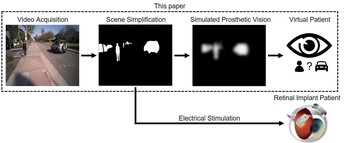

Deep learning-based scene simplification for bionic vision

We combined deep learning-based scene simplification strategies with a psychophysically validated computational model of the retina to generate realistic predictions of simulated prosthetic vision.

Nicole Han, Sudhanshu Srivastava, Aiwen Xu, Devi Klein, Michael Beyeler ACM Augmented Humans (AHs) ’21