A nuanced understanding of the strategies that people who are blind or visually impaired employ to perform different instrumental activities of daily living (iADLs) is essential to the success of future visual accessibility aids.

Lily M. Turkstra

(she/her)

PhD Student

Psychological & Brain Sciences

University of California, Santa Barbara

Lily Turkstra is a PhD student in the Psychological and Brain Sciences department at UCSB, where she studies how various neural and psychological states impact prosthetic vision. Her research explores how modulating these states may improve perceptual outcomes and user experiences with visual prostheses.

Lily obtained her B.S. in Psychology, with minors in Biology and Music, from Cal Poly, San Luis Obispo, where she also conducted psychoacoustic research in the Multisensory Perception Lab. Before beginning her PhD, she worked as the lab manager of the Bionic Vision Lab, where she studied assistive technology use among individuals with visual impairments. She has also worked as a behavioral therapist supporting children who are non-speaking and has conducted research on the effects of spaceflight on the brain.

Outside of the lab, she enjoys tidepooling, playing the bass, and biking around Santa Barbara.

- 3205 BioEngineering

- lturkstra@ucsb.edu

Honors & Awards

- NEI Early Career Travel Grant, Annual Meeting of the Vision Sciences Society (VSS), St. Pete Beach, FL (2024)

- Travel Grant, Functional Vision & Accessibility (FVA), SKERI, San Francisco, CA (2023)

- Travel Grant, The Eye & The Chip, Detroit, MI (2023)

Education

- PhD in Psychological & Brain Sciences, University of California, Santa Barbara, 2028 (expected)

- BS in Research Psychology, California Polytechnic State University (Cal Poly), San Luis Obispo, CA, 2022

Project Affiliate

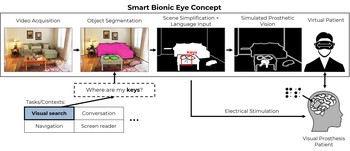

Towards a Smart Bionic Eye

Rather than aiming to one day restore natural vision, we might be better off thinking about how to create practical and useful artificial vision now.

Publications

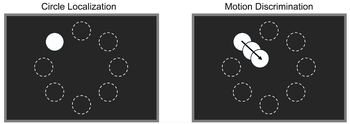

Gamification enhances user engagement and task performance in prosthetic vision testing

We found that gamification can influence measured performance and user experience in prosthetic vision testing, but benefits are not universal and depend on task demands and cognitive load.

Lily M. Turkstra, Byron A. Johnson, Arathy Kartha, Gislin Dagnelie, Michael Beyeler. medRxiv

(Note: LMT and BAJ contributed equally to this work.)

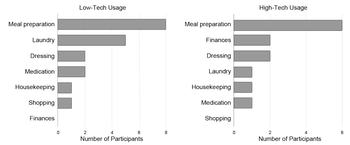

Assistive technology use in domestic activities by people who are blind

We present insights from 16 semi-structured interviews with individuals who are either legally or completely blind, highlighting both the current use and potential future applications of technologies for home-based iADLs.

Lily M. Turkstra, Tanya Bhatia, Alexa Van Os, Michael Beyeler. Scientific Reports

Aligning visual prosthetic development with implantee needs

Our interview study found a significant gap between researcher expectations and implantee experiences with visual prostheses, underscoring the importance of focusing future research on usability and real-world application.

Lucas Nadolskis, Lily M. Turkstra, Ebenezer Larnyo, Michael Beyeler. Translational Vision Science & Technology (TVST) 13(28)

(Note: LN and LMT contributed equally to this work.)

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses on the shared representations between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis, and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler. Workshop on Representational Alignment (Re-Align), ICLR ’24

(Note: JG and GP contributed equally to this work.)