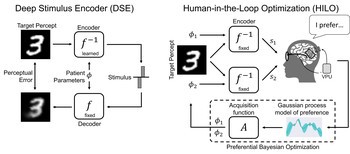

Rather than predicting perceptual distortions, one needs to solve the inverse problem: What is the best stimulus to generate a desired visual percept?

Galen Pogoncheff

(he/him)

PhD Candidate

Computer Science

University of California, Santa Barbara

Galen Pogoncheff is a Computer Science PhD candidate researching how behavioral and processing biases in biological neural systems can inform the development of human-centered deep learning systems. He brings industry experience researching and developing machine learning models for neural interfaces, integrating multimodal signal data for real-time decoding of motor intent and cognitive state.

Prior to this work, Galen completed his B.S. and M.S. in Computer Science at the University of Colorado, Boulder, specializing in Data Science and Engineering.

Outside of the lab, you can find Galen in the mountains or at the gym.

- 3205 BioEngineering

- galenpogoncheff@ucsb.edu

Honors & Awards

- CCS Community Fellowship (2026)

- Meta Summer Internship (2024, 2026)

- Outstanding TA Award, CS, UCSB (2024)

Education

- PhD in Computer Science, 2027 (expected), University of California, Santa Barbara

- MS in Computer Science, 2020, University of Colorado, Boulder

- BS in Computer Science, 2018, University of Colorado, Boulder

Project Lead

NeuroAI Models of the Visual System

Understanding the visual system in health and disease is a key issue for neuroscience and neuroengineering applications such as visual prostheses.

Project Affiliate

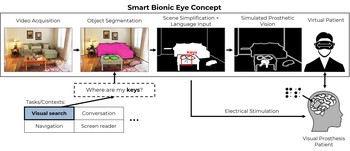

Towards a Smart Bionic Eye

Rather than aiming to one day restore natural vision, we might be better off thinking about how to create practical and useful artificial vision now.

Publications

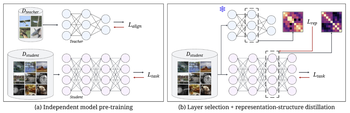

BIRD: Behavior induction via representation-structure distillation

We introduce BIRD (Behavior Induction via Representation-structure Distillation), a flexible framework for transferring aligned behavior by matching the internal representation structure of a student model to that of a teacher.

Galen Pogoncheff, Michael Beyeler

The 14th International Conference on Learning Representations (ICLR ’26)

Beyond sight: Probing alignment between image models and blind V1

We present a series of analyses on the shared representations between evoked neural activity in the primary visual cortex of a blind human with an intracortical visual prosthesis, and latent visual representations computed in deep neural networks.

Jacob Granley, Galen Pogoncheff, Alfonso Rodil, Leili Soo, Lily M. Turkstra, Lucas Nadolskis, Arantxa Alfaro Saez, Cristina Soto Sanchez, Eduardo Fernandez Jover, Michael Beyeler

Workshop on Representational Alignment (Re-Align), ICLR ’24

(Note: JG and GP contributed equally to this work.)

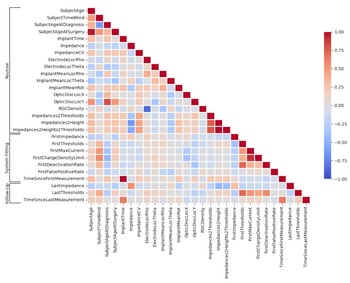

Explainable machine learning predictions of perceptual sensitivity for retinal prostheses

We present explainable artificial intelligence (XAI) models fit on a large longitudinal dataset that can predict perceptual thresholds on individual Argus II electrodes over time.

Galen Pogoncheff, Zuying Hu, Ariel Rokem, Michael Beyeler

Journal of Neural Engineering

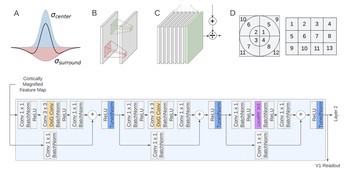

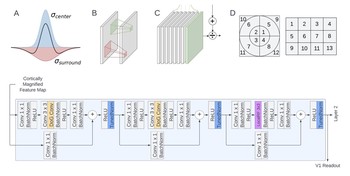

Explaining V1 properties with a biologically constrained deep learning architecture

We systematically incorporated neuroscience-derived architectural components into CNNs to identify a set of mechanisms and architectures that comprehensively explain neural activity in V1.

Galen Pogoncheff, Jacob Granley, Michael Beyeler

37th Conference on Neural Information Processing Systems (NeurIPS ’23)