How does the brain extract relevant visual features from the rich, dynamic visual input that typifies active exploration, and how does the neural representation of these features support visual navigation?

Yuchen Hou

(she/her/hers)

PhD Candidate

Psychological & Brain Sciences

University of California, Santa Barbara

Yuchen Hou is a PhD Candidate in Computer Science at UC Santa Barbara. She is interested in computational neuroscience and machine learning. Her research goal is to model the dynamics of brain functions by integrating knowledge from computer, cognitive, and neural science.

Before starting her PhD, she earned a BS in Psychological & Brain Sciences at UC Santa Barbara, where she was an undergraduate research assistant in the Bionic Vision Lab.

In her free time, she likes reading fiction and watching action movies.

- 3205 BioEngineering

- yuchenhou@ucsb.edu

Honors & Awards

- Google Summer Internship (2026)

- Exceptional Academic Performance Award, PBS, UCSB (2022)

- Abdullah & Marjorie R. Nasser Memorial Scholarship Fund Award, PBS, UCSB (2022)

Education

- PhD in Computer Science, 2027 (expected), University of California, Santa Barbara

- BS in Psychological & Brain Sciences, 2022, University of California, Santa Barbara

Project Lead

Project Affiliate

NeuroAI Models of the Visual System

Understanding the visual system in health and disease is a key issue for neuroscience and neuroengineering applications such as visual prostheses.

Publications

Mouse vs. AI: A neuroethological benchmark for visual robustness and neural alignment

We propose the Mouse vs. AI: Robust Foraging Competition at NeurIPS ’25, a novel bioinspired visual robustness benchmark to test generalization in reinforcement learning (RL) agents trained to navigate a virtual environment toward a visually cued target.

Marius Schneider, Joe Canzano, Jing Peng, Yuchen Hou, Spencer LaVere Smith, Michael Beyeler. arXiv:2509.14446

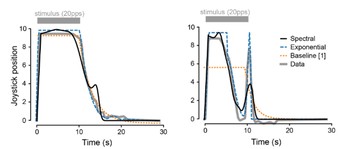

Predicting the temporal dynamics of prosthetic vision

We introduce two computational models designed to accurately predict phosphene fading and persistence under varying stimulus conditions, cross-validated on behavioral data reported by nine users of the Argus II Retinal Prosthesis System.

Yuchen Hou, Laya Pullela, Jiaxin Su, Sriya Aluru, Shivani Sista, Xiankun Lu, Michael Beyeler. IEEE EMBC ’24

(Note: YH and LP contributed equally to this work.)

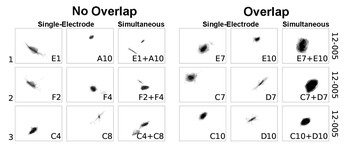

Axonal stimulation affects the linear summation of single-point perception in three Argus II users

We retrospectively analyzed phosphene shape data collected from three Argus II patients to investigate which neuroanatomical and stimulus parameters predict paired-phosphene appearance and whether phosphenes add up linearly.

Yuchen Hou, Devyani Nanduri, Jacob Granley, James D. Weiland, Michael Beyeler. Journal of Neural Engineering

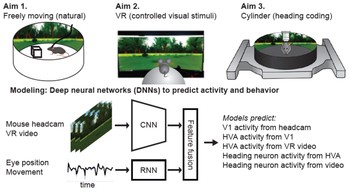

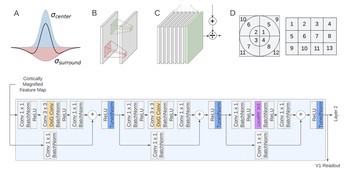

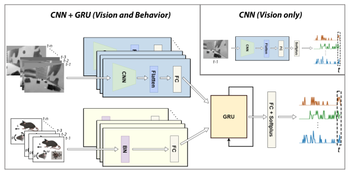

Multimodal deep learning model unveils behavioral dynamics of V1 activity in freely moving mice

We introduce a multimodal recurrent neural network that integrates gaze-contingent visual input with behavioral and temporal dynamics to explain V1 activity in freely moving mice.

Aiwen Xu, Yuchen Hou, Cristopher M. Niell, Michael Beyeler. 37th Conference on Neural Information Processing Systems (NeurIPS) ’23