We present insights from 16 semi-structured interviews with individuals who are either legally or completely blind, highlighting both the current use and potential future applications of technologies for home-based iADLs.

Understanding the Information Needs of People Who Are Blind or Visually Impaired

The goal of this project is to obtain a nuanced understanding of the strategies that people who are blind or visually impaired (BVI) employ to perform different instrumental activities of daily living (iADLs).

Identifying useful and relevant visual cues that could support these iADLs, especially when the task involves some level of scene understanding, orientation, and mobility, will be essential to the success of near-future visual accessibility aids.

Project Lead:

PhD Student

Project Affiliates:

PhD Student

Principal Investigator:

Associate Professor

Publications

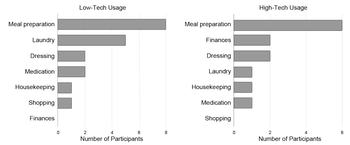

Assistive technology use in domestic activities by people who are blind

Lily M. Turkstra, Tanya Bhatia, Alexa Van Os, Michael Beyeler. Scientific Reports

Aligning visual prosthetic development with implantee needs

Our interview study found a significant gap between researcher expectations and implantee experiences with visual prostheses, underscoring the importance of focusing future research on usability and real-world application.

Lucas Nadolskis, Lily M. Turkstra, Ebenezer Larnyo, Michael Beyeler. Translational Vision Science & Technology (TVST) 13(28)

(Note: LN and LMT contributed equally to this work.)

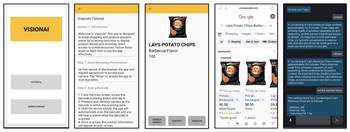

VisionAI - Shopping Assistance for People with Vision Impairments

We introduce VisionAI, a mobile application designed to enhance the in-store shopping experience for individuals with vision impairments.

Anika Arora, Lucas Nadolskis, Michael Beyeler, Misha Sra. 2024 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct)

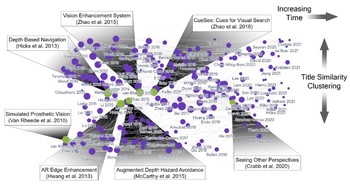

A systematic review of extended reality (XR) for understanding and augmenting vision loss

We present a systematic literature review of 227 publications from 106 different venues assessing the potential of XR technology to further visual accessibility.

Justin Kasowski, Byron A. Johnson, Ryan Neydavood, Anvitha Akkaraju, Michael Beyeler. Journal of Vision 23(5):5, 1–24

(Note: JK and BAJ are co-first authors.)