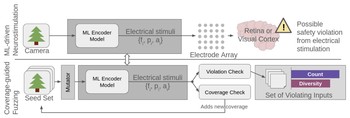

We propose a systematic, quantitative approach to detect and characterize unsafe stimulation patterns in ML-driven neurostimulation systems.

End-to-End Optimization of Bionic Vision

Our limited understanding of multi-electrode interactions severely constrains current stimulation protocols. For example, Argus II protocols simply attempt to minimize electric field interactions by maximizing phase delays across electrodes (‘time-multiplexing’). The underlying assumption is that single-electrode percepts act as atomic ‘building blocks’ of patterned vision. In practice, however, these building blocks often fail to assemble into more complex percepts.
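A minimal sketch of time-multiplexing, assuming a hypothetical round-robin schedule: each electrode gets its own slot within one stimulation period, and spreading the onsets evenly maximizes the smallest delay between consecutive pulses. The function names, the 20 Hz rate, and the 900 µs pulse width are illustration values, not actual Argus II parameters.

```python
from itertools import combinations


def time_multiplex(n_electrodes, pulse_width_us, period_us):
    """Spread pulse onsets evenly over one stimulation period (hypothetical scheme).

    Evenly spaced onsets maximize the smallest delay between consecutive
    pulses. Returns one (start_us, end_us) window per electrode.
    """
    slot_us = period_us // n_electrodes
    if pulse_width_us > slot_us:
        raise ValueError("pulses would overlap: period too short for this many electrodes")
    return [(i * slot_us, i * slot_us + pulse_width_us) for i in range(n_electrodes)]


def overlaps(a, b):
    """True if two half-open time windows [start, end) share any instant."""
    return a[0] < b[1] and b[0] < a[1]


# Hypothetical parameters: 4 electrodes, 900 us pulses, 20 Hz (50,000 us period).
windows = time_multiplex(n_electrodes=4, pulse_width_us=900, period_us=50_000)
print(windows)  # [(0, 900), (12500, 13400), (25000, 25900), (37500, 38400)]
print(any(overlaps(a, b) for a, b in combinations(windows, 2)))  # False
```

A schedule like this only keeps pulses from coinciding in time; it says nothing about whether the resulting single-electrode percepts add up to the intended shape, which is exactly the gap described above.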

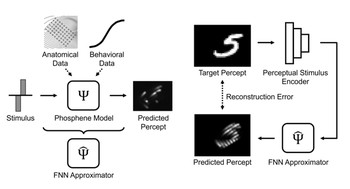

The goal of this project is therefore to develop new stimulation strategies that minimize perceptual distortions. One potential avenue is to view this as an end-to-end optimization problem, where a deep neural network (encoder) is trained to predict the electrical stimulus needed to produce a desired percept (target).
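The end-to-end idea can be sketched in a few lines. This is a minimal sketch under strong simplifying assumptions: the phosphene model is a hypothetical fixed linear map `Phi` that paints one Gaussian blob per electrode, and the encoder is a single linear layer `W` rather than a deep network. Only `W` is trained; the gradient of the perceptual error flows back through the fixed forward model, which is the part the approach depends on.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical fixed forward model: 16 electrodes on a 4x4 grid, each painting
# a Gaussian phosphene onto a 12x12 image. Phi has shape (pixels, electrodes).
side, grid, sigma = 12, 4, 1.5
ys, xs = np.mgrid[0:side, 0:side]
centers = (np.arange(grid) + 0.5) * side / grid
Phi = np.stack([np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2)).ravel()
                for cy in centers for cx in centers], axis=1)


def forward(stim):
    """Fixed, differentiable phosphene model: (batch, electrodes) -> (batch, pixels)."""
    return stim @ Phi.T


# Toy targets that the forward model can actually produce.
targets = forward(rng.uniform(0.0, 1.0, size=(256, Phi.shape[1])))

# Linear encoder (target image -> stimulus). Only W is trained; Phi stays fixed.
W = np.zeros((Phi.shape[0], Phi.shape[1]))

# Step size 1/L, where L is the Lipschitz constant of the loss gradient,
# so plain gradient descent never increases this quadratic loss.
lr = targets.size / (2 * np.linalg.norm(targets, 2) ** 2 * np.linalg.norm(Phi, 2) ** 2)

losses = []
for _ in range(500):
    stim = targets @ W                          # encoder: predicted stimulus
    err = forward(stim) - targets               # error measured on the simulated percept
    losses.append(np.mean(err ** 2))
    grad_stim = 2.0 * (err @ Phi) / err.size    # backprop through the fixed forward model
    W -= lr * (targets.T @ grad_stim)           # chain rule into the encoder weights

print(f"initial loss {losses[0]:.4f}, final loss {losses[-1]:.4f}")
```

Swapping in a deep encoder and an autodiff framework changes only how the gradient is computed; the structure stays the same: target in, stimulus out, error measured on the simulated percept.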

Importantly, the encoder would have to be trained with the phosphene model in the loop, such that the overall network minimizes a perceptual error between the predicted and target percepts. This is technically challenging because the phosphene model must be:

- simple enough to be differentiable such that it can be included in the backward pass of a deep neural network,

- complex enough to be able to explain the spatiotemporal perceptual distortions observed in real prosthesis patients, and

- amenable to an efficient implementation such that the training of the network is feasible.
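To make the first and third requirements concrete, the sketch below uses a hypothetical toy phosphene model (one Gaussian blob per electrode, with saturating brightness `1 - exp(-x)`). It is not a model from the publications listed here and deliberately ignores the spatiotemporal distortions named in the second requirement. It computes the perceptual loss and its analytic gradient with respect to the stimulus using a few vectorized matrix products, then checks that gradient against central finite differences.

```python
import numpy as np

# Hypothetical toy phosphene model (illustration only): each electrode adds a
# Gaussian blob, and brightness saturates via 1 - exp(-x).
side, grid, sigma = 10, 3, 1.5
ys, xs = np.mgrid[0:side, 0:side]
centers = (np.arange(grid) + 0.5) * side / grid
Phi = np.stack([np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2)).ravel()
                for cy in centers for cx in centers], axis=1)   # (pixels, electrodes)


def percept(stim):
    """Saturating phosphene model: one vectorized matrix product per call."""
    return 1.0 - np.exp(-(Phi @ stim))


def loss_and_grad(stim, target):
    """Perceptual MSE and its analytic gradient with respect to the stimulus."""
    drive = Phi @ stim
    err = (1.0 - np.exp(-drive)) - target
    loss = np.mean(err ** 2)
    # Chain rule: dL/dp = 2 err / P, dp/ddrive = exp(-drive), ddrive/dstim = Phi.
    grad = Phi.T @ (2.0 * err / err.size * np.exp(-drive))
    return loss, grad


rng = np.random.default_rng(1)
stim = rng.uniform(0.0, 1.0, Phi.shape[1])
target = percept(rng.uniform(0.0, 1.0, Phi.shape[1]))
loss, grad = loss_and_grad(stim, target)

# Central finite differences as an independent check of the analytic gradient.
eps = 1e-6
numeric = np.array([
    (loss_and_grad(stim + eps * e, target)[0] - loss_and_grad(stim - eps * e, target)[0]) / (2 * eps)
    for e in np.eye(stim.size)
])
print(np.max(np.abs(grad - numeric)))
```

A model this simple passes the differentiability and efficiency tests easily; the hard part, as the list above notes, is keeping those properties once the model is rich enough to reproduce what patients actually report.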

Project Lead: Postdoctoral Researcher

Project Affiliate:

Principal Investigator: Associate Professor

Collaborator: Professor, Universidad Miguel Hernández, Spain

DP2-LM014268:

Towards a Smart Bionic Eye: AI-Powered Artificial Vision for the Treatment of Incurable Blindness

PI: Michael Beyeler (UCSB)

September 2022 - August 2027

Common Fund, Office of the Director (OD); National Library of Medicine (NLM)

National Institutes of Health (NIH)

Publications

Fuzzing the brain: Automated stress testing for the safety of ML-driven neurostimulation

Mara Downing, Matthew Peng, Jacob Granley, Michael Beyeler, Tevfik Bultan. arXiv:2512.05383

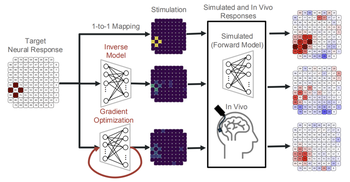

Deep learning-based control of electrically evoked activity in human visual cortex

We developed a data-driven neural control framework for a visual cortical prosthesis in a blind human, showing that deep learning can synthesize efficient, stable stimulation patterns that reliably evoke percepts and outperform conventional calibration methods.

Pehuén Moure, Jacob Granley, Fabrizio Grani, Leili Soo, Antonio Lozano, Rocio López-Peco, Adrián Villamarin-Ortiz, Cristina Soto-Sánchez, Shih-Chii Liu, Michael Beyeler, Eduardo Fernández. bioRxiv

(Note: PM, JG, and FG are co-first authors. SL, MB, and EF are co-last authors.)

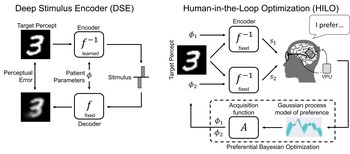

Human-in-the-loop optimization for deep stimulus encoding in visual prostheses

We propose a personalized stimulus encoding strategy that combines state-of-the-art deep stimulus encoding with preferential Bayesian optimization.

Jacob Granley, Tristan Fauvel, Matthew Chalk, Michael Beyeler. 37th Conference on Neural Information Processing Systems (NeurIPS) ’23

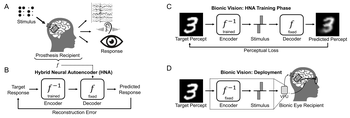

Hybrid neural autoencoders for stimulus encoding in visual and other sensory neuroprostheses

What is the required stimulus to produce a desired percept? Here we frame this as an end-to-end optimization problem, where a deep neural network encoder is trained to invert a known, fixed forward model that approximates the underlying biological system.

Jacob Granley, Lucas Relic, Michael Beyeler. 36th Conference on Neural Information Processing Systems (NeurIPS) ’22

Deep learning-based perceptual stimulus encoder for bionic vision

We propose a perceptual stimulus encoder based on convolutional neural networks that is trained in an end-to-end fashion to predict the electrode activation patterns required to produce a desired visual percept.

Lucas Relic, Bowen Zhang, Yi-Lin Tuan, Michael Beyeler. ACM Augmented Humans (AHs) ’22