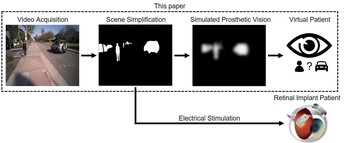

We combined deep learning-based scene simplification strategies with a psychophysically validated computational model of the retina to generate realistic predictions of simulated prosthetic vision.

PCMag: Building the bionic eye… with car tech?

Over the years, cyberpunk tales and sci-fi series have featured characters with cybernetic vision, most recently Star Trek: Discovery’s Lieutenant Keyla Detmer and her ocular implants. In the real world, restoring “natural” vision is still a complex puzzle, though researchers at UC Santa Barbara are developing a smart prosthesis that provides cues to the visually impaired, much like a computer vision system talks to a self-driving car.

Today, over 10 million people worldwide are living with profound visual impairment, many due to retinal degenerative diseases. Ahead of this week’s Augmented Humans International Conference, we spoke with Dr. Michael Beyeler, Assistant Professor in Computer Science and Psychological & Brain Sciences at UCSB, who is forging ahead with synthetic sight trials at his Bionic Vision Lab and will be presenting a paper at the conference.

Read the full interview here.

Publications

Deep learning-based scene simplification for bionic vision

Nicole Han, Sudhanshu Srivastava, Aiwen Xu, Devi Klein, Michael Beyeler

ACM Augmented Humans (AHs) ’21